I. Web UI Deployment

1. Deploying the dashboard from the official manifest

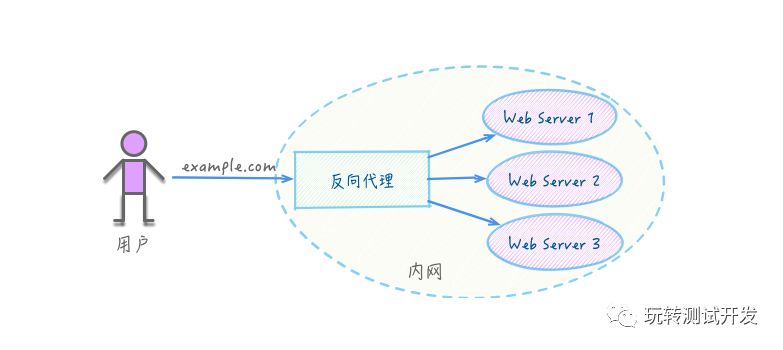

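Note: the official manifest exposes the service as ClusterIP, which makes it inconvenient to verify access from outside the cluster. The official instructions use kubectl proxy instead.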

Download the recommended.yaml file:

```shell
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml
```

If the download fails, try adding this hosts entry:

```shell
echo "151.101.108.133 raw.githubusercontent.com" >> /etc/hosts
```
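Appending with `>>` adds a duplicate line every time the command is rerun. Below is a minimal idempotent variant. The helper name `add_hosts_entry` and its optional file argument are hypothetical; the file argument lets you try it on a scratch copy first. The IP above may also change over time, so it is worth confirming it with a DNS lookup before pinning it.

```shell
#!/bin/sh
# Hypothetical helper: append "<ip> <host>" to a hosts file only if <host>
# is not already mapped there. The file defaults to /etc/hosts.
add_hosts_entry() {
  ip=$1
  host=$2
  file=${3:-/etc/hosts}
  # Scan non-comment lines; fields 2..NF are the host names for the IP in field 1.
  if awk -v h="$host" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }' "$file" 2>/dev/null; then
    echo "skip: $host already present in $file"
  else
    printf '%s %s\n' "$ip" "$host" >> "$file"
    echo "added: $ip $host to $file"
  fi
}
```

Run as root: `add_hosts_entry 151.101.108.133 raw.githubusercontent.com`. A second run prints the "skip" message instead of adding another line.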

Because the GitHub URL is sometimes unreachable, you can also look for kubernetes-dashboard on Gitee.

Pull the images:

```shell
docker pull kubernetesui/dashboard:v2.0.3
docker pull kubernetesui/metrics-scraper:v1.0.4
```

Deploy:

```text
[root@master01 dashboard]# kubectl apply -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
```

Check the status:

```text
kubectl get pods --all-namespaces -o wide
NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE     IP               NODE       NOMINATED NODE   READINESS GATES
kube-system            calico-kube-controllers-555fc8cc5c-b6pvw     1/1     Running   1          6d1h    192.168.241.68   master01   <none>           <none>
kube-system            calico-node-bhbrt                            1/1     Running   0          16m     192.168.1.113    node01     <none>           <none>
kube-system            calico-node-lgttw                            1/1     Running   0          16m     192.168.1.112    master01   <none>           <none>
kube-system            coredns-66bff467f8-bfkd7                     1/1     Running   3          6d21h   192.168.241.67   master01   <none>           <none>
kube-system            coredns-66bff467f8-qc6cw                     1/1     Running   3          6d21h   192.168.241.66   master01   <none>           <none>
kube-system            etcd-master01                                1/1     Running   48         6d21h   192.168.1.112    master01   <none>           <none>
kube-system            kube-apiserver-master01                      1/1     Running   50         6d21h   192.168.1.112    master01   <none>           <none>
kube-system            kube-controller-manager-master01             1/1     Running   2          6d21h   192.168.1.112    master01   <none>           <none>
kube-system            kube-proxy-dqtvt                             1/1     Running   2          6d21h   192.168.1.112    master01   <none>           <none>
kube-system            kube-proxy-t986s                             1/1     Running   0          6d21h   192.168.1.113    node01     <none>           <none>
kube-system            kube-scheduler-master01                      1/1     Running   2          6d21h   192.168.1.112    master01   <none>           <none>
kubernetes-dashboard   dashboard-metrics-scraper-6b4884c9d5-lb9tv   1/1     Running   0          30m     192.168.241.69   master01   <none>           <none>
kubernetes-dashboard   kubernetes-dashboard-5fd6f47c48-fk56r        1/1     Running   0          30m     192.168.241.70   master01   <none>           <none>
```

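Note: the kubernetes-dashboard pod from the official manifest may fail, with its logs on the node showing `dial tcp 10.96.0.1:443: i/o timeout` (the pod cannot reach the API server's ClusterIP). In that case, modify recommended.yaml so that the pod is deployed on the master node. This assumes the master node carries the label `type=master` (for example, `kubectl label nodes master01 type=master`):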

```yaml
spec:
  nodeSelector:
    type: master
  containers:
    - name: kubernetes-dashboard
      image: kubernetesui/dashboard:v2.0.3
      imagePullPolicy: IfNotPresent
      ports:
        - containerPort: 8443
          protocol: TCP
```
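A nodeSelector entry matches only nodes whose labels contain exactly that key=value pair. Before applying, you can check a node against the LABELS column printed by `kubectl get node <name> --show-labels`. The helper name `has_label` is hypothetical, and the code only sketches the exact-match rule:

```shell
#!/bin/sh
# Hypothetical helper: succeed if a comma-separated label list (the LABELS
# column of `kubectl get node <name> --show-labels`) contains the exact
# key=value pair that a nodeSelector entry requires.
has_label() {
  labels=$1
  want=$2
  # Wrap both sides in commas so "type=master" does not match "xtype=master".
  case ",$labels," in
    *",$want,"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `has_label "$(kubectl get node master01 --show-labels --no-headers | awk '{print $NF}')" type=master` should succeed once master01 has been labeled.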

Run on the master (the command occupies the terminal; it can also be started in the background):

```shell
[root@master01 ~]# kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'
```

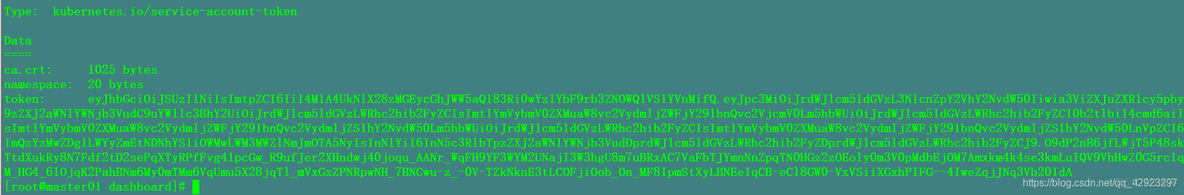
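With kubectl proxy, the Dashboard is not served at the proxy root but under the API server's service-proxy path. The sketch below builds that URL. The helper name is hypothetical, 8001 is kubectl proxy's default port, and the path follows the layout given in the Dashboard v2 README:

```shell
#!/bin/sh
# Hypothetical helper: print the Dashboard URL reachable through `kubectl proxy`.
# Path layout: /api/v1/namespaces/<ns>/services/<scheme>:<service>:<port-name>/proxy/
dashboard_proxy_url() {
  host=${1:-localhost}
  port=${2:-8001}   # kubectl proxy listens on 8001 unless --port is given
  ns=kubernetes-dashboard
  svc=kubernetes-dashboard
  # "https:" makes the API server talk TLS to the service; the port name after
  # the last colon is left empty because the Service has a single unnamed port.
  printf 'http://%s:%s/api/v1/namespaces/%s/services/https:%s:/proxy/\n' \
    "$host" "$port" "$ns" "$svc"
}
```

On the master itself, `dashboard_proxy_url` prints the localhost URL to open in a browser.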

Get the token:

```shell
kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
```

Copy the token and log in:

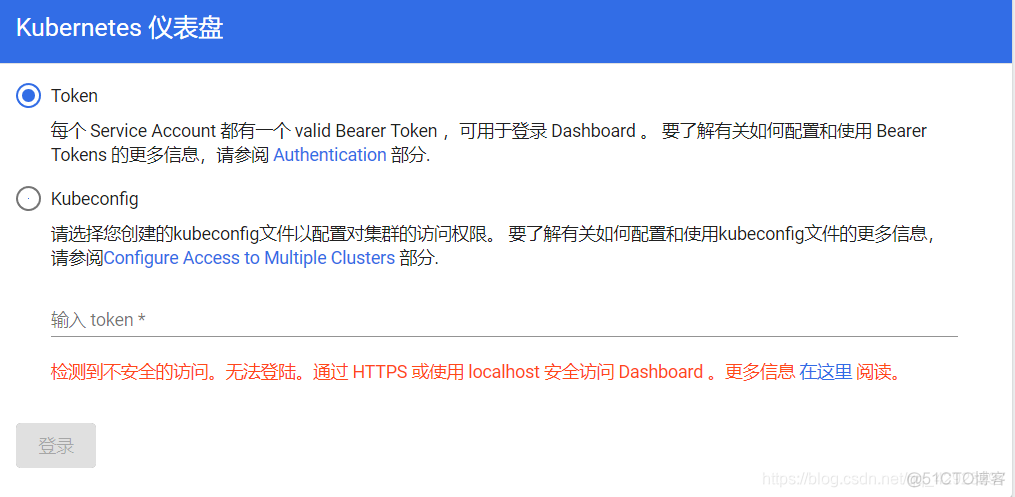

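If you use the proxy here:

```shell
kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'
```

then only local access works. From another machine the login fails, because Dashboard only accepts token sign-in over HTTPS or from localhost, and kubectl proxy serves plain HTTP.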

Another option is port forwarding. Run:

```shell
kubectl port-forward --namespace kubernetes-dashboard --address 0.0.0.0 service/kubernetes-dashboard 443
```

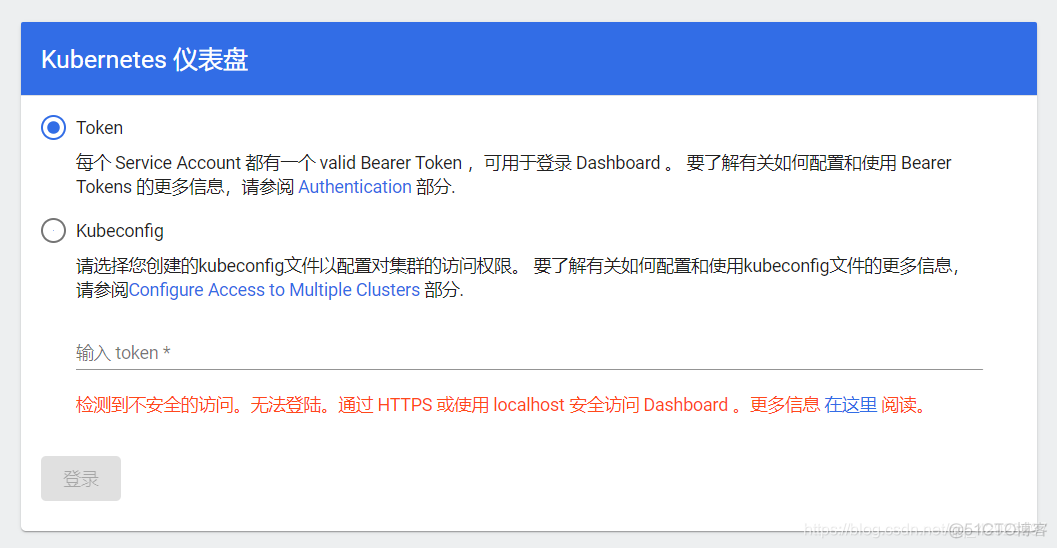

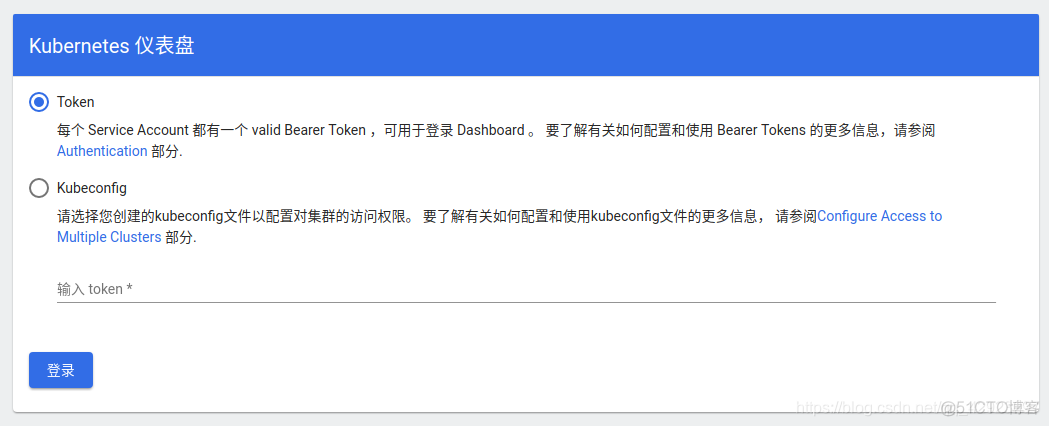

Open the page:

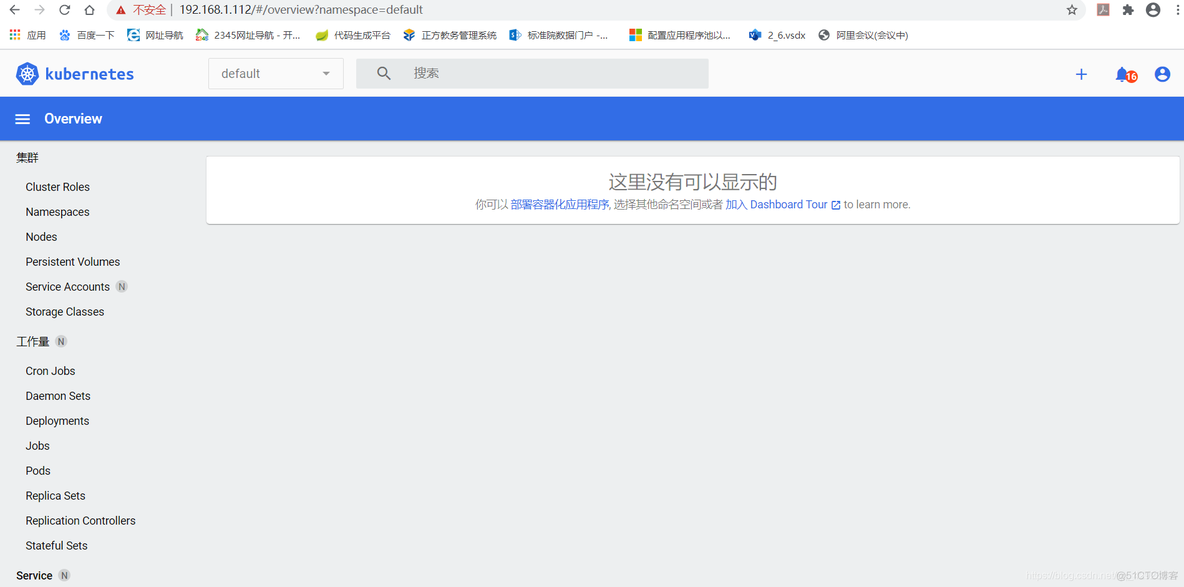

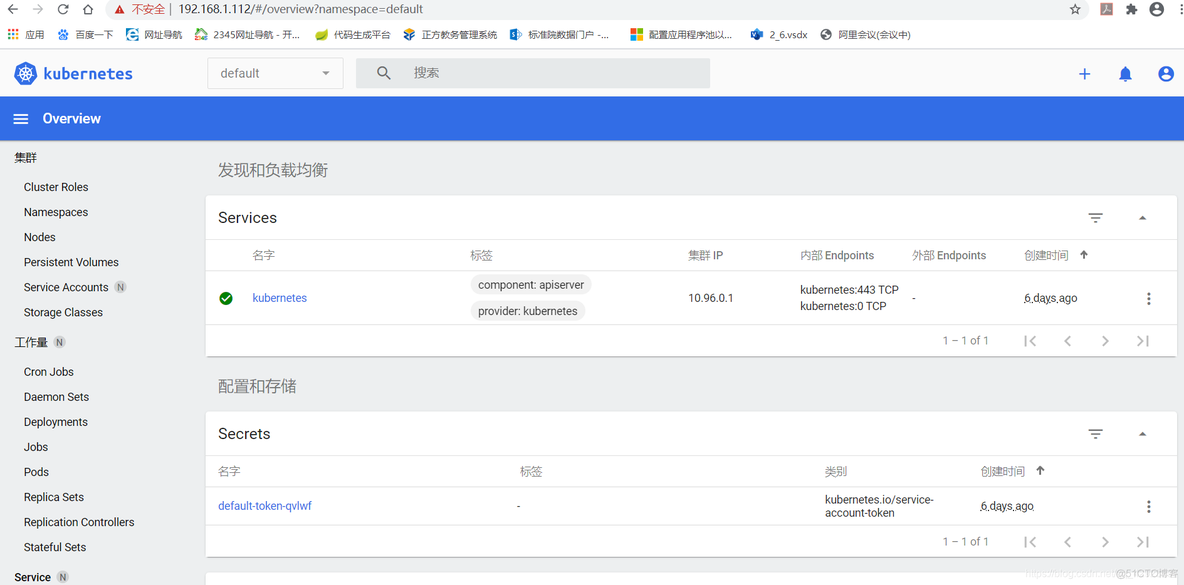

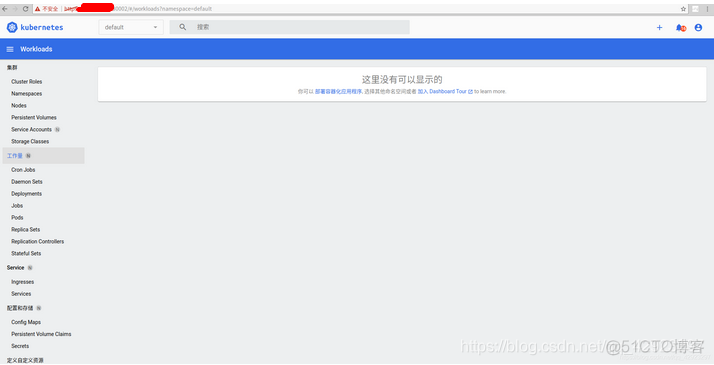

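Open https://192.168.1.112/#/login and enter the token. The login succeeds, but nothing is shown. The default token belongs to the kubernetes-dashboard service account in the kubernetes-dashboard namespace, which has only minimal RBAC permissions.

So we need a user in the kube-system namespace, and we log in with that user's token (a suitable account already exists there by default).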
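You can read which namespace a token belongs to from the token itself. A service-account token is a JWT whose `sub` claim has the form `system:serviceaccount:<namespace>:<name>`. The helper below is a minimal sketch with a hypothetical name. It only base64url-decodes the payload and does not verify the signature:

```shell
#!/bin/sh
# Hypothetical helper: print "<namespace>/<serviceaccount>" taken from the
# "sub" claim of a service-account JWT (system:serviceaccount:<ns>:<name>).
# It only decodes the payload; the signature is NOT verified.
sa_of_token() {
  # The payload is the second dot-separated part, base64url-encoded.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the "=" padding; restore it so base64 -d accepts the input.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  sub=$(printf '%s' "$payload" | base64 -d 2>/dev/null \
    | sed -n 's/.*"sub":"\([^"]*\)".*/\1/p')
  ns=$(printf '%s' "$sub" | cut -d: -f3)
  name=$(printf '%s' "$sub" | cut -d: -f4)
  printf '%s/%s\n' "$ns" "$name"
}
```

For the default dashboard token, this would be expected to print `kubernetes-dashboard/kubernetes-dashboard`. A token that shows everything should come from an account with broader permissions.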

Get a Kubernetes login token:

```shell
kubectl -n kube-system describe $(kubectl -n kube-system get secret -n kube-system -o name | grep namespace) | grep token
```

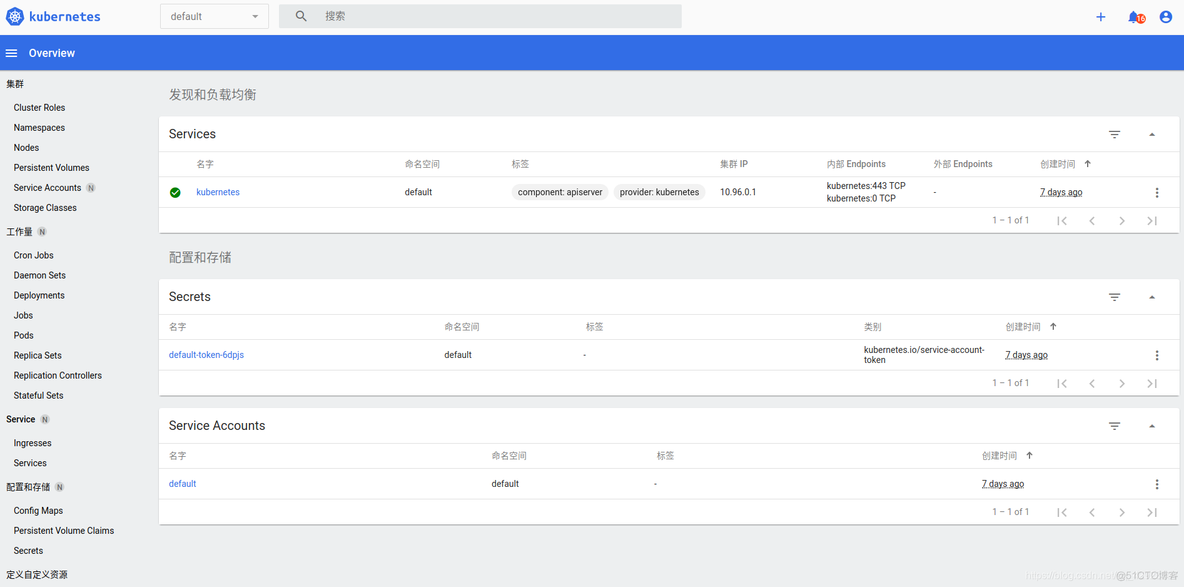
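`grep token` also matches other lines of the `describe` output, such as the secret's name and its `kubernetes.io/service-account-token` type, so the token value still has to be picked out by hand. The sketch below keeps only the value of the `token:` field. The helper name is hypothetical, and it reads `kubectl describe secret` output on stdin:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl describe secret ...` output on stdin and
# print only the value of the "token:" data field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Usage: `kubectl -n kube-system describe $(kubectl -n kube-system get secret -o name | grep namespace) | extract_token`. Alternatively, `kubectl -n kube-system get secret <name> -o jsonpath='{.data.token}' | base64 -d` reads the same field straight from the secret object.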

Log in again:

2. Deploying with a modified official manifest (NodePort; this uses a different environment, a single-node cluster)

As before, first download the official manifest, recommended.yaml.

Allow pods to be scheduled on the master:

```shell
kubectl taint nodes --all node-role.kubernetes.io/master-
```

To make the master unschedulable again:

```shell
kubectl taint nodes master node-role.kubernetes.io/master=:NoSchedule
```

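Taint effects (pods with a matching toleration are exempt):

NoSchedule: pods are never scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid the node

NoExecute: new pods are not scheduled, and pods already running on the node are evicted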
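All three effects use the same `kubectl taint` syntax: `key=value:Effect` adds a taint and `key-` removes it. The sketch below prints the command for a given effect and rejects anything else. The helper is hypothetical and only prints the command; it does not run kubectl. Newer kubeadm versions use the key `node-role.kubernetes.io/control-plane` instead of `.../master`, so check `kubectl describe node` for the key actually present.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the kubectl taint command for a node,
# taint key and effect. Passing "-" as the effect prints the removal form.
taint_cmd() {
  node=$1
  key=$2
  effect=$3
  case $effect in
    NoSchedule|PreferNoSchedule|NoExecute)
      # Empty value: key=:Effect, as in the commands used above.
      printf 'kubectl taint nodes %s %s=:%s\n' "$node" "$key" "$effect"
      ;;
    -)
      # A trailing "-" on the key removes all taints with that key.
      printf 'kubectl taint nodes %s %s-\n' "$node" "$key"
      ;;
    *)
      echo "unknown taint effect: $effect" >&2
      return 1
      ;;
  esac
}
```

For instance, `taint_cmd master node-role.kubernetes.io/master NoSchedule` prints the same command as the one used above to disallow scheduling.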

Modify the Service in the file as follows:

```shell
vim recommended_nodePort.yaml
```

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30002   # added
  selector:
    k8s-app: kubernetes-dashboard
```
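`nodePort: 30002` must fall inside the API server's NodePort range, which is 30000-32767 unless `--service-node-port-range` was changed. The sketch below performs that check. The helper is hypothetical, and its range bounds are parameters that default to the stock values:

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 is a valid NodePort within [$2, $3],
# defaulting to kube-apiserver's stock --service-node-port-range 30000-32767.
nodeport_ok() {
  port=$1
  lo=${2:-30000}
  hi=${3:-32767}
  # Reject empty or non-numeric input before the numeric comparisons.
  case $port in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}
```

A port outside the range, such as 8443, makes the API server reject the Service, so it is cheaper to catch it before `kubectl apply`.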

Deploy (make sure the manifest you apply contains the NodePort changes above):

```text
[root@master dashboard]# kubectl apply -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
```

Check the services:

```shell
[root@master dashboard]# kubectl get pod,svc --all-namespaces -o wide
```

Access the page at https://<master node IP>:30002 (the NodePort set above).

Get a token and log in:

```shell
kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep kubernetes-dashboard-token | awk '{print $1}')
```

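You will then find that system resources are not visible, because the default token belongs to the kubernetes-dashboard namespace.

So we create a user in the kube-system namespace and log in with its token.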

```shell
vim admin_user.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
# cluster-admin is a built-in ClusterRole (it already exists in a kubeadm-created cluster), so we only need to bind to it
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kube-system
```

Deploy:

```text
[root@master dashboard]# kubectl create -f admin_user.yaml
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created
```

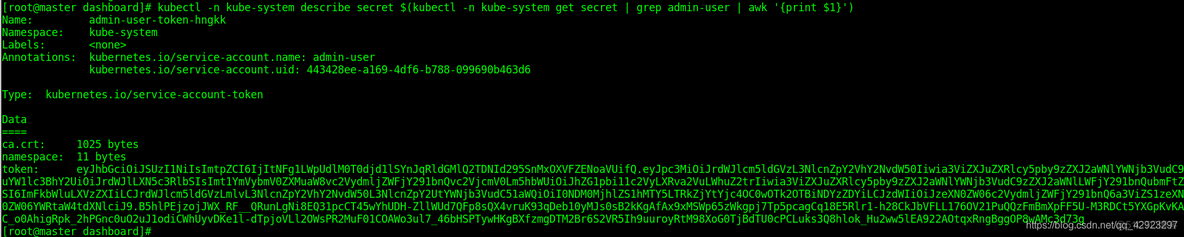

Get the token:

```shell
kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
```

Log in.


Original article: https://blog.51cto.com/u_16099304/10116746