昨天重新装了一下虚拟机,发现好多都不记得了,记录一下,防止到时候又忘了

一.改名

sudo vim /etc/hostnamesudo vim /etc/hostnamesudo vim /etc/hostname

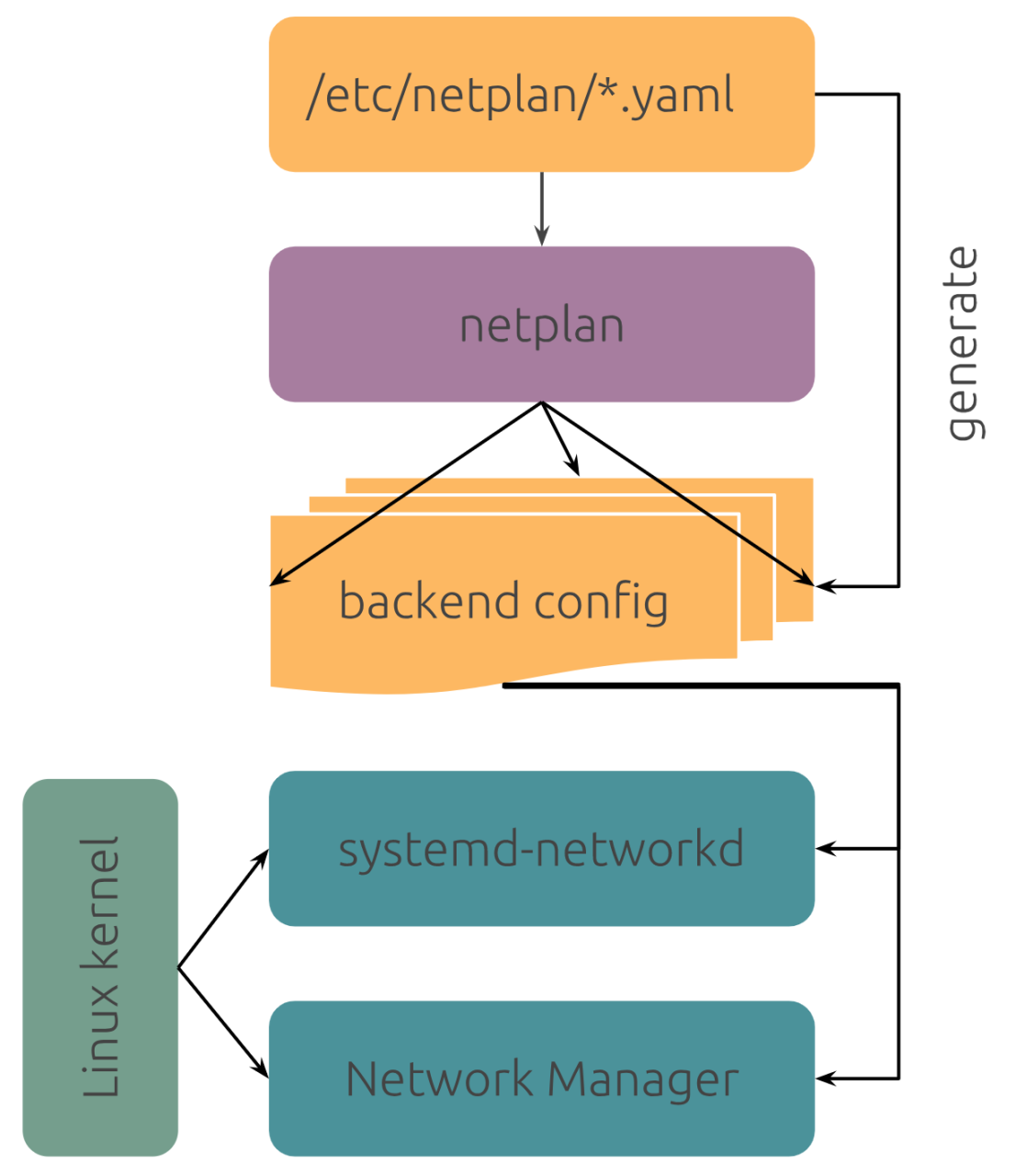

二.配置虚拟网卡

Ubuntu 18.04 LTS 已切换到 Netplan 来配置网络接口

路径

cd /etc/netplant/ 里面的.yaml文件cd /etc/netplant/ 里面的.yaml文件cd /etc/netplant/ 里面的.yaml文件

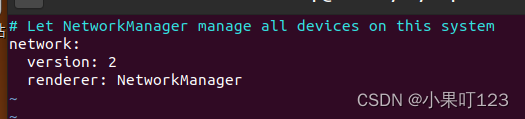

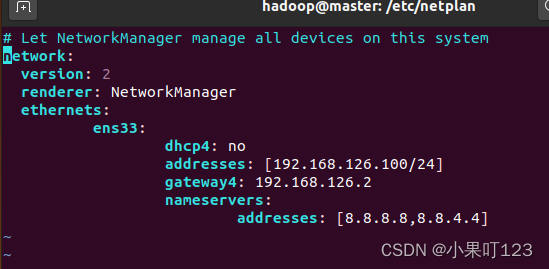

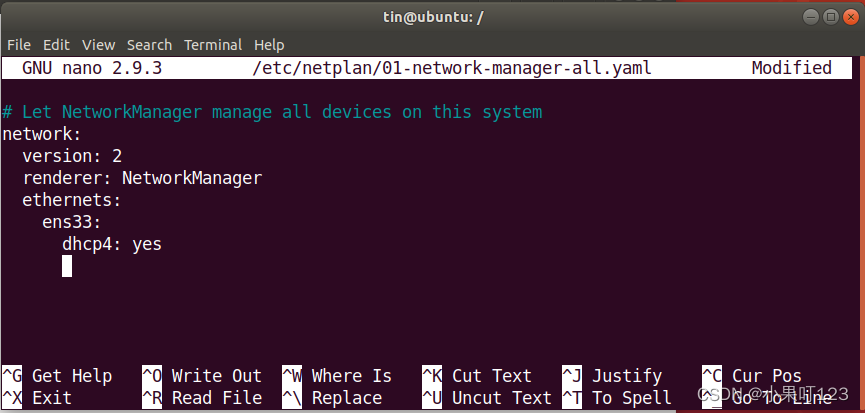

里面的文件打开长这样

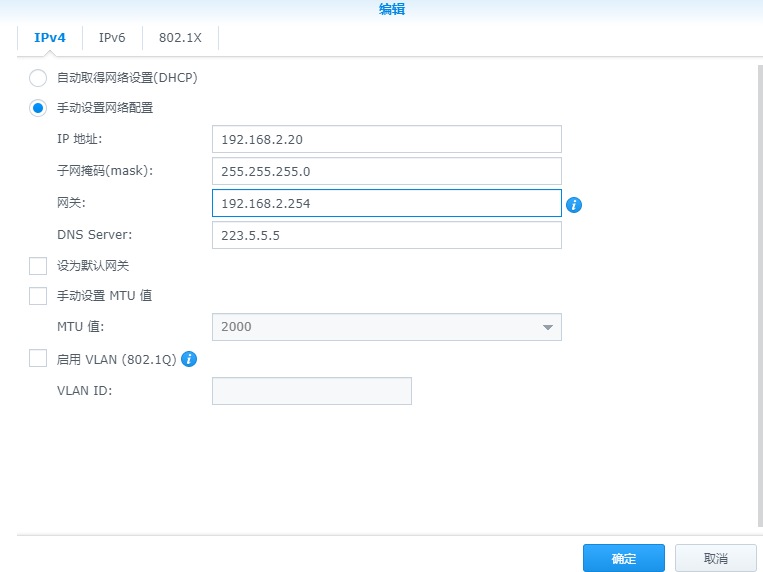

1.配置.yaml文件

network: Version: 2 Renderer: NetworkManager/ networkd ethernets: DEVICE_NAME: dhcp4: yes/no addresses: [IP_ADDRESS/NETMASK] gateway: GATEWAY nameservers: addresses: [NAMESERVER_1, NAMESERVER_2]network: Version: 2 Renderer: NetworkManager/ networkd ethernets: DEVICE_NAME: dhcp4: yes/no addresses: [IP_ADDRESS/NETMASK] gateway: GATEWAY nameservers: addresses: [NAMESERVER_1, NAMESERVER_2]network: Version: 2 Renderer: NetworkManager/ networkd ethernets: DEVICE_NAME: dhcp4: yes/no addresses: [IP_ADDRESS/NETMASK] gateway: GATEWAY nameservers: addresses: [NAMESERVER_1, NAMESERVER_2]

DEVICE_NAME:接口的名称。

dhcp4:是或否取决于动态或静态 IP 寻址

addresses:设备的 IP 地址以前缀表示法。不要使用网络掩码。

gateway:连接到外部网络的网关 IP 地址

nameservers : DNS 名称服务器的地址

请注意,Yaml 文件的缩进相当严格。使用空格来缩进,而不是制表符。否则,您将遇到错误。

配置完成之后的样子

network: version: 2 renderer: NetworkManager ethernets: ens33: dhcp4: no addresses: [192.168.126.100/24] gateway4: 192.168.126.2 nameservers: addresses: [8.8.8.8,8.8.4.4]network: version: 2 renderer: NetworkManager ethernets: ens33: dhcp4: no addresses: [192.168.126.100/24] gateway4: 192.168.126.2 nameservers: addresses: [8.8.8.8,8.8.4.4]network: version: 2 renderer: NetworkManager ethernets: ens33: dhcp4: no addresses: [192.168.126.100/24] gateway4: 192.168.126.2 nameservers: addresses: [8.8.8.8,8.8.4.4]

—–使用动态IP(没用过)—

2.测试并使其生效

测试

sudo netplan trysudo netplan trysudo netplan try

如果没有问题,它将返回配置接受消息。如果配置文件未通过测试,它将恢复为以前的工作配置。

应用配置 现在通过以 sudo 身份运行以下命令来应用新配置:

sudo netplan –d apply -d可以省略 为了看到错误在哪sudo netplan –d apply -d可以省略 为了看到错误在哪sudo netplan –d apply -d可以省略 为了看到错误在哪

重启网络服务

sudo systemctl restart network-managersudo systemctl restart network-managersudo systemctl restart network-manager

测试有没有生效可以

ip a ping www.baidu.comip a ping www.baidu.comip a ping www.baidu.com

三.链接Xhell

先试试能不能直接连接

不能的话

1、关闭防火墙 sudo ufw disable 2、开发22端口 sudo ufw allow 22 3、最后安装ssh sudo apt-get install openssh-server1、关闭防火墙 sudo ufw disable 2、开发22端口 sudo ufw allow 22 3、最后安装ssh sudo apt-get install openssh-server1、关闭防火墙 sudo ufw disable 2、开发22端口 sudo ufw allow 22 3、最后安装ssh sudo apt-get install openssh-server

再去连接

###

四.ssh免密

准备工作

在 sudo vim /etc/hosts 加上配置 三台全部要

sudo vim /etc/hosts 192.168.126.100 master 192.168.126.101 slaver1 192.168.126.102 slaver2sudo vim /etc/hosts 192.168.126.100 master 192.168.126.101 slaver1 192.168.126.102 slaver2sudo vim /etc/hosts 192.168.126.100 master 192.168.126.101 slaver1 192.168.126.102 slaver2

三台机器全部配置完成之后,按ESC和:wq保存退出 使用 sudo apt-get install openssh-server 安装ssh

sudo apt-get install openssh-serversudo apt-get install openssh-serversudo apt-get install openssh-server

开始做免密

在三台机器每台做一遍

Ssh-keygen 创建一个钥匙 Ssh-copy-id mymaster 将公匙传到mymaster里 ssh-copy-id slaver1 ssh-copy-id slaver2Ssh-keygen 创建一个钥匙 Ssh-copy-id mymaster 将公匙传到mymaster里 ssh-copy-id slaver1 ssh-copy-id slaver2Ssh-keygen 创建一个钥匙 Ssh-copy-id mymaster 将公匙传到mymaster里 ssh-copy-id slaver1 ssh-copy-id slaver2

ssh slaver1 进入slaver1 ssh slaver2 进入slaver2 如果想退出输入exit即可

五.安装haoop和jdk

准备工作

1.用xftp把两个文件传过去

-

hadoop-2.7.1.tar.gz

-

jdk-8u162-linux-x64.tar.gz

2.解压、改名、授权

sudo tar zxf hadoop-3.1.3.tar.gz -C /usr/local/src/ sudo mv hadoop-3.1.3/ hadoop sudo chown -R hadoop:hadoop hadoop/ sudo tar zxf jdk-8u231-linux-x64.tar.gz -C /usr/local/src/ sudo mv jdk1.8.0_231/ jdk1.8 sudo chown -R hadoop:hadoop jdk1.8 ll 查看权限sudo tar zxf hadoop-3.1.3.tar.gz -C /usr/local/src/ sudo mv hadoop-3.1.3/ hadoop sudo chown -R hadoop:hadoop hadoop/ sudo tar zxf jdk-8u231-linux-x64.tar.gz -C /usr/local/src/ sudo mv jdk1.8.0_231/ jdk1.8 sudo chown -R hadoop:hadoop jdk1.8 ll 查看权限sudo tar zxf hadoop-3.1.3.tar.gz -C /usr/local/src/ sudo mv hadoop-3.1.3/ hadoop sudo chown -R hadoop:hadoop hadoop/ sudo tar zxf jdk-8u231-linux-x64.tar.gz -C /usr/local/src/ sudo mv jdk1.8.0_231/ jdk1.8 sudo chown -R hadoop:hadoop jdk1.8 ll 查看权限

3.配置环境变量

sudo vim ~/.bashrc export JAVA_HOME=/usr/local/src/jdk1.8 export PATH=$PATH:$JAVA_HOME/bin export HADOOP_HOME=/usr/local/src/hadoop export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin source ~/.bashrcsudo vim ~/.bashrc export JAVA_HOME=/usr/local/src/jdk1.8 export PATH=$PATH:$JAVA_HOME/bin export HADOOP_HOME=/usr/local/src/hadoop export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin source ~/.bashrcsudo vim ~/.bashrc export JAVA_HOME=/usr/local/src/jdk1.8 export PATH=$PATH:$JAVA_HOME/bin export HADOOP_HOME=/usr/local/src/hadoop export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin source ~/.bashrc

修改hadoop的配置文件

1.vim core-site.xml

<configuration> <property> <name>hadoop.tmp.dir</name> <value>file:/usr/local/src/hadoop/tmp</value> <description>Abase for other temporary directories.</description> </property> <property> <name>fs.defaultFS</name> <value>hdfs://master:9000</value> </property> </configuration><configuration> <property> <name>hadoop.tmp.dir</name> <value>file:/usr/local/src/hadoop/tmp</value> <description>Abase for other temporary directories.</description> </property> <property> <name>fs.defaultFS</name> <value>hdfs://master:9000</value> </property> </configuration><configuration> <property> <name>hadoop.tmp.dir</name> <value>file:/usr/local/src/hadoop/tmp</value> <description>Abase for other temporary directories.</description> </property> <property> <name>fs.defaultFS</name> <value>hdfs://master:9000</value> </property> </configuration>

2.hdfs-site.xml

<configuration> <property> <name>dfs.replication</name> <value>3</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/src/hadoop/tmp/dfs/name</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/src/hadoop/tmp/dfs/data</value> </property> <property> <name>dfs.namenode.secondary.http-address</name> <value>master:50090</value> </property> </configuration><configuration> <property> <name>dfs.replication</name> <value>3</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/src/hadoop/tmp/dfs/name</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/src/hadoop/tmp/dfs/data</value> </property> <property> <name>dfs.namenode.secondary.http-address</name> <value>master:50090</value> </property> </configuration><configuration> <property> <name>dfs.replication</name> <value>3</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/src/hadoop/tmp/dfs/name</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/src/hadoop/tmp/dfs/data</value> </property> <property> <name>dfs.namenode.secondary.http-address</name> <value>master:50090</value> </property> </configuration>

3.mapred-site.xml

<configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration><configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration><configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration>

4.yarn-site.xml

<configuration> <property> <name>yarn.resourcemanager.hostname</name> <value>master</value> </property> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <!-- Site specific YARN configuration properties --> </configuration><configuration> <property> <name>yarn.resourcemanager.hostname</name> <value>master</value> </property> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <!-- Site specific YARN configuration properties --> </configuration><configuration> <property> <name>yarn.resourcemanager.hostname</name> <value>master</value> </property> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <!-- Site specific YARN configuration properties --> </configuration>

5.slaves 或者 workers (如果有slaves文件就到这个文件里面修改,没有的话就到 workers)

将里面的内容改为

master slave1 slave2master slave1 slave2master slave1 slave2

6.hadoop-env.sh

在最后一行插入jdk的位置:

export JAVA_HOME=/usr/local/src/jdk1.8export JAVA_HOME=/usr/local/src/jdk1.8export JAVA_HOME=/usr/local/src/jdk1.8

把配置好的文件传到另外两台机器

scp core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml workers hadoop-env.sh slaver1:/usr/local/src/hadoop/etc/hadoop/ scp core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml workers hadoop-env.sh slaver2:/usr/local/src/hadoop/etc/hadoop/scp core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml workers hadoop-env.sh slaver1:/usr/local/src/hadoop/etc/hadoop/ scp core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml workers hadoop-env.sh slaver2:/usr/local/src/hadoop/etc/hadoop/scp core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml workers hadoop-env.sh slaver1:/usr/local/src/hadoop/etc/hadoop/ scp core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml workers hadoop-env.sh slaver2:/usr/local/src/hadoop/etc/hadoop/

删除hadoop的日志文件

rm -r logs/* rm -r tmp/*rm -r logs/* rm -r tmp/*rm -r logs/* rm -r tmp/*

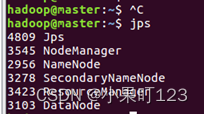

hadoop namenode -format 格式化刚刚配置的(有选项就选yes) 然后使用start-all.sh 重新启动服务 启动服务结束后输入jps,如下图一样是6个就成功啦

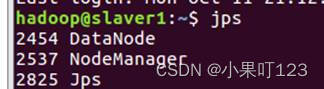

进入slaver1和slaver2输入jps,如下

原文链接:https://blog.csdn.net/m0_53504112/article/details/127916326