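1. Installation notes

This article demonstrates a binary installation of a highly available Kubernetes cluster (v1.17+). The binary installation procedure differs little between versions; you only need to match the component versions that belong to the release you install.

For production, use a Kubernetes release whose patch version is at least 5; for example, 1.19.5 and later are suitable for production.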

2.基本环境配置

表1-1 高可用Kubernetes集群规划

|

角色 |

机器名 |

机器配置 |

ip地址 |

安装软件 |

|

master1 |

k8s-master01.example.local |

2C4G |

172.31.3.101 |

chrony-client、containerd、kube-controller-manager、kube-scheduler、kube-apiserver、kubelet、kube-proxy、kubectl |

|

master2 |

k8s-master02.example.local |

2C4G |

172.31.3.102 |

chrony-client、containerd、kube-controller-manager、kube-scheduler、kube-apiserver、kubelet、kube-proxy、kubectl |

|

master3 |

k8s-master03.example.local |

2C4G |

172.31.3.103 |

chrony-client、containerd、kube-controller-manager、kube-scheduler、kube-apiserver、kubelet、kube-proxy、kubectl |

|

ha1 |

k8s-ha01.example.local |

2C2G |

172.31.3.104 |

chrony-server、haproxy、keepalived |

|

ha2 |

k8s-ha02.example.local |

2C2G |

172.31.3.105 |

chrony-server、haproxy、keepalived |

|

harbor1 |

k8s-harbor01.example.local |

2C2G |

172.31.3.106 |

chrony-client、docker、docker-compose、harbor |

|

harbor2 |

k8s-harbor02.example.local |

2C2G |

172.31.3.107 |

chrony-client、docker、docker-compose、harbor |

|

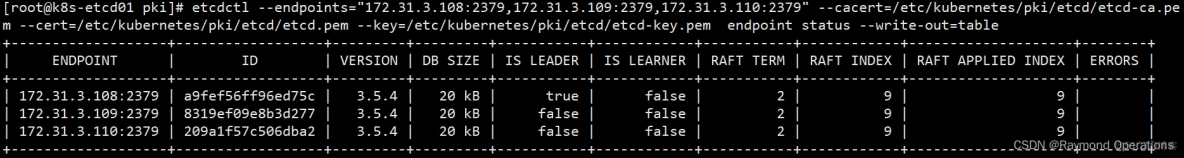

etcd1 |

k8s-etcd01.example.local |

2C2G |

172.31.3.108 |

chrony-client、etcd |

|

etcd2 |

k8s-etcd02.example.local |

2C2G |

172.31.3.109 |

chrony-client、etcd |

|

etcd3 |

k8s-etcd03.example.local |

2C2G |

172.31.3.110 |

chrony-client、etcd |

|

node1 |

k8s-node01.example.local |

2C4G |

172.31.3.111 |

chrony-client、containerd、kubelet、kube-proxy |

|

node2 |

k8s-node02.example.local |

2C4G |

172.31.3.112 |

chrony-client、containerd、kubelet、kube-proxy |

|

node3 |

k8s-node03.example.local |

2C4G |

172.31.3.113 |

chrony-client、containerd、kubelet、kube-proxy |

|

VIP,在ha01和ha02主机实现 |

172.31.3.188 |

软件版本信息和Pod、Service网段规划:

|

配置信息 |

备注 |

|

支持的操作系统版本 |

CentOS 7.9/stream 8、Rocky 8、Ubuntu 18.04/20.04 |

|

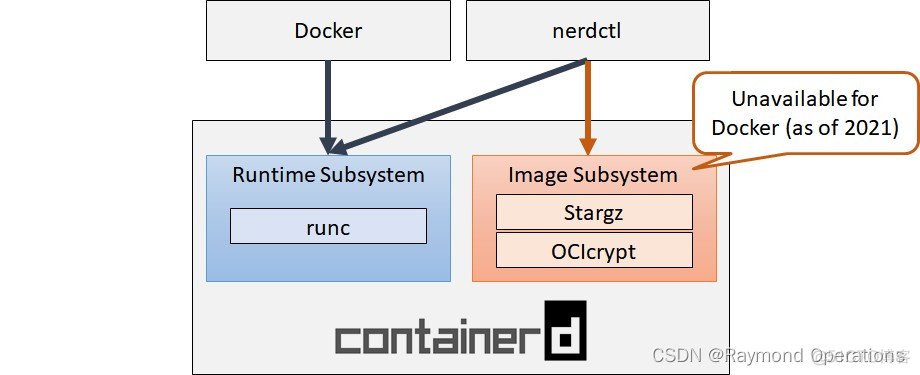

Container Runtime |

containerd v1.6.8 |

|

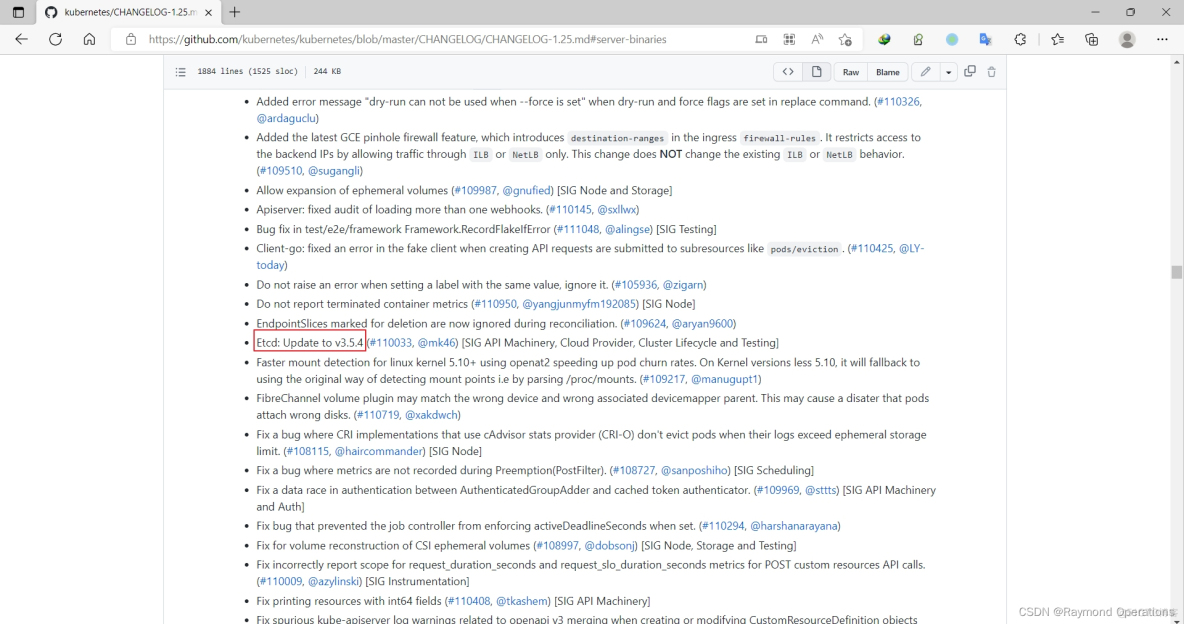

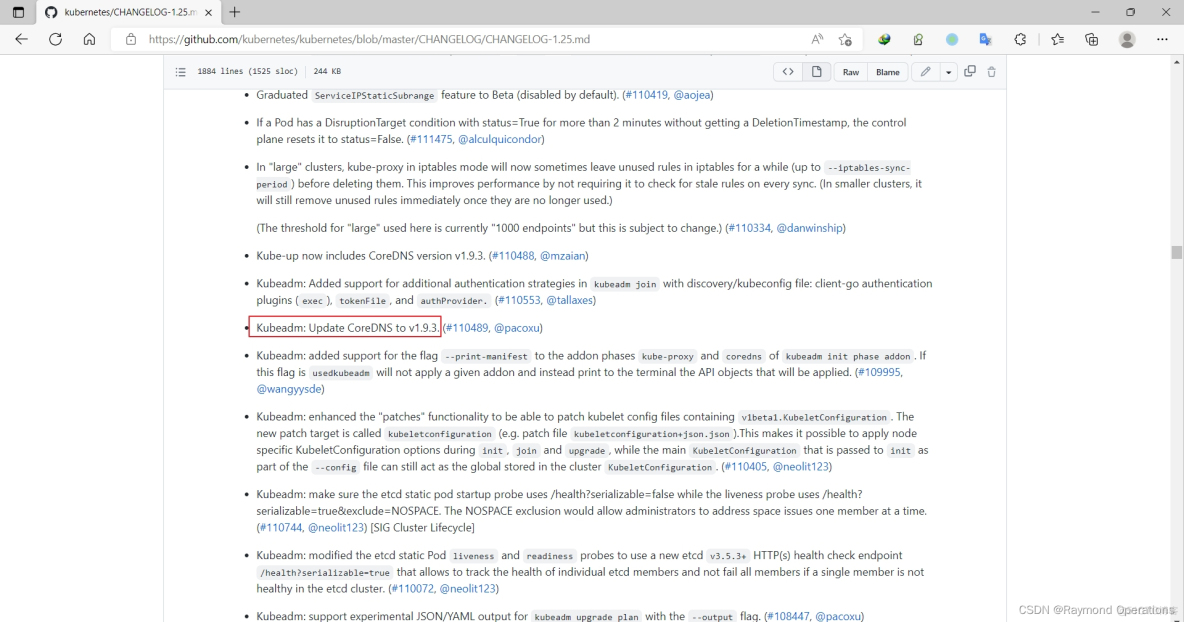

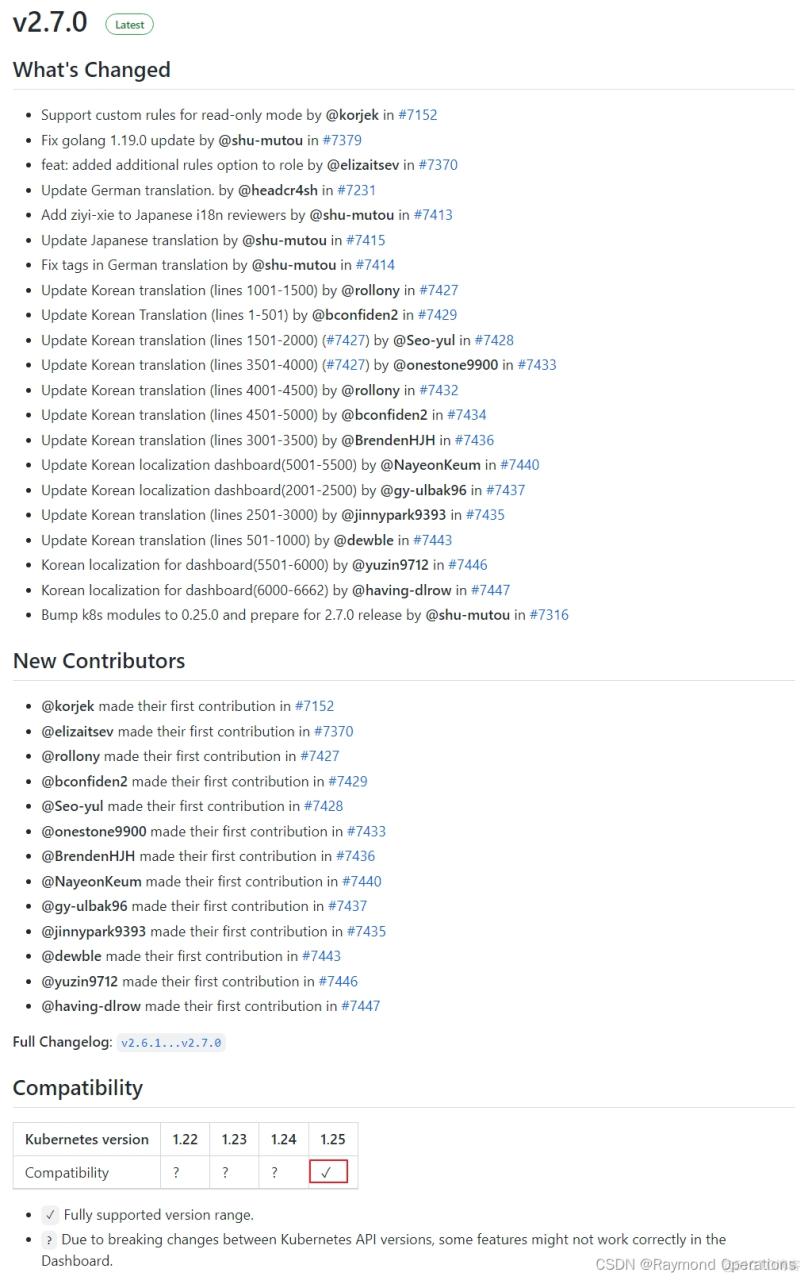

kubernetes版本 |

1.25.2 |

|

宿主机网段 |

172.31.0.0/21 |

|

Pod网段 |

192.168.0.0/12 |

|

Service网段 |

10.96.0.0/12 |

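Note:

Three subnets are involved when installing the cluster:

Host subnet: the network of the servers on which Kubernetes is installed.

Pod subnet: the network from which Pod IPs are allocated; these are effectively the containers' IPs.

Service subnet: the network for Kubernetes Services, which are used for communication between containers in the cluster.

In this article the Service subnet is 10.96.0.0/12.

The Pod subnet is 192.168.0.0/12.

The host subnet is 172.31.0.0/21.

These three subnets must not overlap in any way.

For example, if the hosts use 10.105.0.x addresses, the Service subnet cannot be 10.96.0.0/12, because the usable addresses in 10.96.0.0/12 are:

10.96.0.1 ~ 10.111.255.254

10.105 falls inside that range, so the two networks overlap and the Service subnet has to be changed.

It can be changed to 192.168.0.0/16, in which case the Pod subnet must also move off 192.168.0.0/12 (which contains 192.168.0.0/16), for example to a range that starts with 172. Note that if the Service subnet starts with 192.168, the prefix length should be 16 rather than 12, because a /12 block containing 192.168.0.0 actually starts at 192.160.0.1, not 192.168.0.1.

By the same reasoning, the other subnets must not overlap either. The ranges can be calculated with http://tools.jb51.net/aideddesign/ip_net_calc/.

The usual recommendation is therefore to make sure the first octets differ. For example, if the hosts start with 192, the Service subnet can be 10.96.0.0/12.

If the hosts start with 10, change the Service subnet to 192.168.0.0/16.

If the hosts start with 172, change the Pod subnet to 192.168.0.0/12.

Pay attention to the combination: use one 10.x range, one 172.x range, and one 192.x range. When the first octets differ, subnet conflicts are ruled out and the calculation step can be skipped.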
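The overlap rule above can also be checked mechanically. The following is a minimal bash sketch, not part of the original procedure; the helper names (`ip_to_int`, `cidr_range`, `network_of`, `cidr_overlap`) are hypothetical and introduced only for illustration. It assumes plain IPv4 CIDR notation and a bash with 64-bit arithmetic.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original article) for checking CIDR overlap.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
    local n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print "first last" (as integers) for a CIDR block such as 10.96.0.0/12.
cidr_range() {
    local ip=${1%/*} prefix=${1#*/}
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    local first=$(( $(ip_to_int "$ip") & mask ))
    local last=$(( first | (~mask & 0xFFFFFFFF) ))
    echo "$first $last"
}

# Print the real network address of a CIDR block.
network_of() {
    local first last
    read -r first last <<< "$(cidr_range "$1")"
    int_to_ip "$first"
}

# Return 0 if the two CIDR blocks share at least one address.
cidr_overlap() {
    local a_first a_last b_first b_last
    read -r a_first a_last <<< "$(cidr_range "$1")"
    read -r b_first b_last <<< "$(cidr_range "$2")"
    (( a_first <= b_last && b_first <= a_last ))
}

# A /12 block that contains 192.168.0.0 really starts at 192.160.0.0.
network_of 192.168.0.0/12

# Pairwise check of the three subnets planned in this article.
nets=(172.31.0.0/21 192.168.0.0/12 10.96.0.0/12)
for ((i = 0; i < ${#nets[@]}; i++)); do
    for ((j = i + 1; j < ${#nets[@]}; j++)); do
        if cidr_overlap "${nets[i]}" "${nets[j]}"; then
            echo "OVERLAP: ${nets[i]} ${nets[j]}"
        else
            echo "ok: ${nets[i]} ${nets[j]}"
        fi
    done
done
```

With the planned values every pair reports `ok`; replacing the host subnet with something like 10.105.0.0/24 makes the pair with 10.96.0.0/12 report `OVERLAP`, which is the conflict described above.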

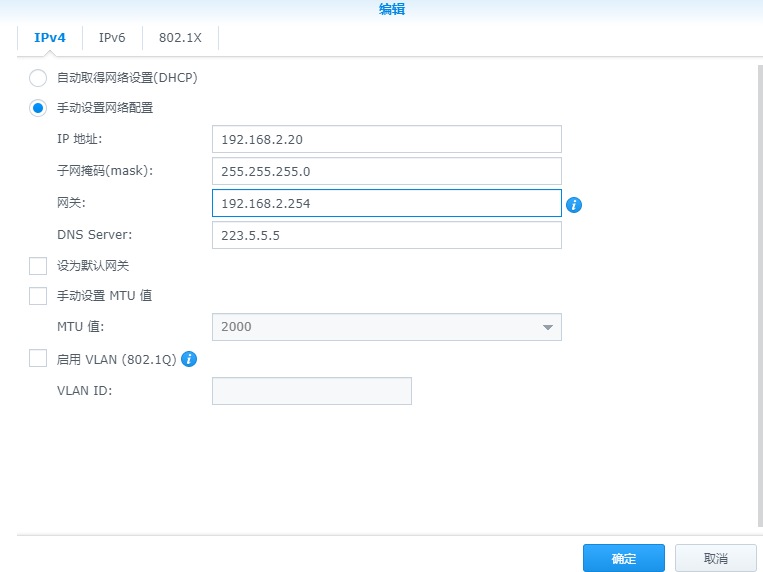

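Host information: server IP addresses must not be assigned by DHCP; configure static IPs.

The VIP (virtual IP) must not duplicate an address already used on the company network. Ping it first; it is usable only if it does not respond. The VIP must be on the same LAN as the hosts. On a public cloud, the VIP is the IP of the cloud provider's load balancer, for example an Alibaba Cloud SLB address or a Tencent Cloud CLB address. Note that in both cases this is the internal (private network) load balancer.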
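The two VIP requirements above (same network as the hosts, and not already in use) can be sketched as a small pre-flight check. This is only an illustration: the helper names `ip_in_cidr` and `vip_is_unused` are hypothetical, and the ping test assumes ICMP is not filtered on the network, so an unanswered ping is a hint rather than proof.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the VIP; not part of the original article.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if address $1 lies inside CIDR block $2.
ip_in_cidr() {
    local prefix=${2#*/}
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "${2%/*}") & mask) ))
}

# Return 0 if nothing answers a ping at the given address.
# Assumption: ICMP echo is allowed on this network.
vip_is_unused() {
    ! ping -c 2 -W 1 "$1" > /dev/null 2>&1
}

VIP=172.31.3.188
HOST_NET=172.31.0.0/21

if ip_in_cidr "$VIP" "$HOST_NET"; then
    echo "$VIP is inside $HOST_NET"
else
    echo "$VIP is outside $HOST_NET" >&2
fi

# On a real host, additionally run:
#   vip_is_unused "$VIP" && echo "$VIP does not answer ping"
```

The ping check is left as a comment so that the sketch does not touch the network unless you opt in.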

2.1 Set IP addresses

Configure the IP address on each node in the following format:

    # CentOS
    [root@k8s-master01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    NAME=eth0
    BOOTPROTO=none
    ONBOOT=yes
    IPADDR=172.31.3.101
    PREFIX=21
    GATEWAY=172.31.0.2
    DNS1=223.5.5.5
    DNS2=180.76.76.76

    # Ubuntu
    root@k8s-master01:~# cat /etc/netplan/01-netcfg.yaml
    network:
      version: 2
      renderer: networkd
      ethernets:
        eth0:
          addresses: [172.31.3.101/21]
          gateway4: 172.31.0.2
          nameservers:
            addresses: [223.5.5.5, 180.76.76.76]

2.2 Set hostnames

Set the hostname on each node by running the matching command on that node:

    hostnamectl set-hostname k8s-master01.example.local
    hostnamectl set-hostname k8s-master02.example.local
    hostnamectl set-hostname k8s-master03.example.local
    hostnamectl set-hostname k8s-ha01.example.local
    hostnamectl set-hostname k8s-ha02.example.local
    hostnamectl set-hostname k8s-harbor01.example.local
    hostnamectl set-hostname k8s-harbor02.example.local
    hostnamectl set-hostname k8s-etcd01.example.local
    hostnamectl set-hostname k8s-etcd02.example.local
    hostnamectl set-hostname k8s-etcd03.example.local
    hostnamectl set-hostname k8s-node01.example.local
    hostnamectl set-hostname k8s-node02.example.local
    hostnamectl set-hostname k8s-node03.example.local
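Because each command above belongs to exactly one node, it can help to derive the command from the node's IP address instead of picking it by hand. The sketch below is an illustration only: the lookup table mirrors Table 1-1, the function name `hostname_cmd_for` is hypothetical, and it prints the command rather than running it. It assumes bash 4 or later for associative arrays.

```shell
#!/usr/bin/env bash
# Sketch only: the IP-to-hostname table mirrors Table 1-1; the helper name is hypothetical.
declare -A HOSTNAMES=(
    [172.31.3.101]=k8s-master01 [172.31.3.102]=k8s-master02 [172.31.3.103]=k8s-master03
    [172.31.3.104]=k8s-ha01     [172.31.3.105]=k8s-ha02
    [172.31.3.106]=k8s-harbor01 [172.31.3.107]=k8s-harbor02
    [172.31.3.108]=k8s-etcd01   [172.31.3.109]=k8s-etcd02   [172.31.3.110]=k8s-etcd03
    [172.31.3.111]=k8s-node01   [172.31.3.112]=k8s-node02   [172.31.3.113]=k8s-node03
)
DOMAIN=example.local

# Print the hostnamectl command for the given IP, or fail if the IP is not in the plan.
hostname_cmd_for() {
    local name=${HOSTNAMES[$1]:-}
    if [ -z "$name" ]; then
        echo "no hostname planned for $1" >&2
        return 1
    fi
    echo "hostnamectl set-hostname ${name}.${DOMAIN}"
}

# Dry run: print the command for one node instead of executing it.
hostname_cmd_for 172.31.3.101
```

On a node, the printed command can be reviewed and then run as root; feeding the function the node's own address (for example the first field of `hostname -I`) is one possible way to automate the choice.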

2.3 Configure package mirrors

On all CentOS 7 nodes, configure the yum repositories as follows:

    rm -f /etc/yum.repos.d/*.repo

    cat > /etc/yum.repos.d/base.repo <<EOF
    [base]
    name=base
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/os/\$basearch/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

    [extras]
    name=extras
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/extras/\$basearch/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

    [updates]
    name=updates
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/updates/\$basearch/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

    [centosplus]
    name=centosplus
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/centosplus/\$basearch/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

    [epel]
    name=epel
    baseurl=https://mirrors.cloud.tencent.com/epel/\$releasever/\$basearch/
    gpgcheck=1
    gpgkey=https://mirrors.cloud.tencent.com/epel/RPM-GPG-KEY-EPEL-\$releasever
    EOF

On all Rocky 8 nodes, configure the yum repositories as follows:

    rm -f /etc/yum.repos.d/*.repo

    cat > /etc/yum.repos.d/base.repo <<EOF
    [BaseOS]
    name=BaseOS
    baseurl=https://mirrors.aliyun.com/rockylinux/\$releasever/BaseOS/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-rockyofficial

    [AppStream]
    name=AppStream
    baseurl=https://mirrors.aliyun.com/rockylinux/\$releasever/AppStream/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-rockyofficial

    [extras]
    name=extras
    baseurl=https://mirrors.aliyun.com/rockylinux/\$releasever/extras/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-rockyofficial

    [plus]
    name=plus
    baseurl=https://mirrors.aliyun.com/rockylinux/\$releasever/plus/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-rockyofficial

    [epel]
    name=epel
    baseurl=https://mirrors.aliyun.com/epel/\$releasever/Everything/\$basearch/
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/epel/RPM-GPG-KEY-EPEL-\$releasever
    EOF

On all CentOS Stream 8 nodes, configure the yum repositories as follows:

    rm -f /etc/yum.repos.d/*.repo

    cat > /etc/yum.repos.d/base.repo <<EOF
    [BaseOS]
    name=BaseOS
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever-stream/BaseOS/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

    [AppStream]
    name=AppStream
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever-stream/AppStream/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

    [extras]
    name=extras
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever-stream/extras/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

    [centosplus]
    name=centosplus
    baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever-stream/centosplus/\$basearch/os/
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

    [epel]
    name=epel
    baseurl=https://mirrors.cloud.tencent.com/epel/\$releasever/Everything/\$basearch/
    gpgcheck=1
    gpgkey=https://mirrors.cloud.tencent.com/epel/RPM-GPG-KEY-EPEL-\$releasever
    EOF

On all Ubuntu nodes, configure the apt sources as follows:

    cat > /etc/apt/sources.list <<EOF
    deb http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs) main restricted universe multiverse
    deb-src http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs) main restricted universe multiverse

    deb http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-security main restricted universe multiverse
    deb-src http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-security main restricted universe multiverse

    deb http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-updates main restricted universe multiverse
    deb-src http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-updates main restricted universe multiverse

    deb http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-proposed main restricted universe multiverse
    deb-src http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-proposed main restricted universe multiverse

    deb http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-backports main restricted universe multiverse
    deb-src http://mirrors.cloud.tencent.com/ubuntu/ $(lsb_release -cs)-backports main restricted universe multiverse
    EOF

    apt update

2.4 Install required tools

    # CentOS
    yum -y install vim tree lrzsz wget jq psmisc net-tools telnet yum-utils device-mapper-persistent-data lvm2 git

    # Rocky: in addition to the tools above, install rsync
    yum -y install rsync

    # Ubuntu
    apt -y install tree lrzsz jq

2.5 Configure SSH key authentication

Configure SSH key authentication to make it easier to sync files later.

The Master01 node logs in to the other nodes without a password. The configuration files and certificates generated during installation are all created on Master01, and cluster management is also done from Master01. On Alibaba Cloud or AWS, a separate kubectl server is required. Configure the keys as follows:

    [root@k8s-master01 ~]# cat ssh_key.sh
    #!/bin/bash
    #
    #**********************************************************************************************
    #Author:        Raymond
    #Date:          2021-12-20
    #FileName:      ssh_key.sh
    #Description:   ssh_key for CentOS 7/8 & Ubuntu 18.04/20.04 & Rocky 8
    #Copyright (C): 2021 All rights reserved
    #*********************************************************************************************
    COLOR="echo -e \\033[01;31m"
    END='\033[0m'

    # Name and IP address of the first ethernet interface on this host
    NET_NAME=`ip addr |awk -F"[: ]" '/^2: e.*/{print $3}'`
    IP=`ip addr show ${NET_NAME}| awk -F" +|/" '/global/{print $3}'`
    # root password shared by all nodes, read by "sshpass -e"
    export SSHPASS=123456
    HOSTS="
    172.31.3.102
    172.31.3.103
    172.31.3.104
    172.31.3.105
    172.31.3.106
    172.31.3.107
    172.31.3.108
    172.31.3.109
    172.31.3.110
    172.31.3.111
    172.31.3.112
    172.31.3.113"

    # Detect the distribution name from /etc/os-release
    os(){
        OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`
    }

    ssh_key_push(){
        # Generate a fresh key pair without a passphrase
        rm -f ~/.ssh/id_rsa*
        ssh-keygen -f /root/.ssh/id_rsa -P '' &> /dev/null
        # Install sshpass if it is missing
        if [ ${OS_ID} == "CentOS" -o ${OS_ID} == "Rocky" ] &> /dev/null;then
            rpm -q sshpass &> /dev/null || { ${COLOR}"Installing the sshpass package"${END};yum -y install sshpass &> /dev/null; }
        else
            dpkg -S sshpass &> /dev/null || { ${COLOR}"Installing the sshpass package"${END};apt -y install sshpass &> /dev/null; }
        fi
        # Authorize the key on this host first
        sshpass -e ssh-copy-id -o StrictHostKeyChecking=no ${IP} &> /dev/null
        [ $? -eq 0 ] && echo ${IP} is finished || echo ${IP} is false
        # Copy the whole ~/.ssh directory to every other host
        for i in ${HOSTS};do
            sshpass -e scp -o StrictHostKeyChecking=no -r /root/.ssh root@${i}: &> /dev/null
            [ $? -eq 0 ] && echo ${i} is finished || echo ${i} is false
        done
        # Distribute the completed known_hosts file
        for i in ${HOSTS};do
            scp /root/.ssh/known_hosts ${i}:.ssh/ &> /dev/null
            [ $? -eq 0 ] && echo ${i} is finished || echo ${i} is false
        done
    }

    main(){
        os
        ssh_key_push
    }

    main

    [root@k8s-master01 ~]# bash ssh_key.sh
    Installing the sshpass package
    172.31.3.101 is finished
    172.31.3.102 is finished
    172.31.3.103 is finished
    172.31.3.104 is finished
    172.31.3.105 is finished
    172.31.3.106 is finished
    172.31.3.107 is finished
    172.31.3.108 is finished
    172.31.3.109 is finished
    172.31.3.110 is finished
    172.31.3.111 is finished
    172.31.3.112 is finished
    172.31.3.113 is finished
    172.31.3.102 is finished
    172.31.3.103 is finished
    172.31.3.104 is finished
    172.31.3.105 is finished
    172.31.3.106 is finished
    172.31.3.107 is finished
    172.31.3.108 is finished
    172.31.3.109 is finished
    172.31.3.110 is finished
    172.31.3.111 is finished
    172.31.3.112 is finished
    172.31.3.113 is finished

2.6 Configure name resolution

Configure hosts on all nodes by editing /etc/hosts on Master01 and copying it to the other nodes:

    cat >> /etc/hosts <<EOF
    172.31.3.101 k8s-master01.example.local k8s-master01
    172.31.3.102 k8s-master02.example.local k8s-master02
    172.31.3.103 k8s-master03.example.local k8s-master03
    172.31.3.104 k8s-ha01.example.local k8s-ha01
    172.31.3.105 k8s-ha02.example.local k8s-ha02
    172.31.3.106 k8s-harbor01.example.local k8s-harbor01
    172.31.3.107 k8s-harbor02.example.local k8s-harbor02
    172.31.3.108 k8s-etcd01.example.local k8s-etcd01
    172.31.3.109 k8s-etcd02.example.local k8s-etcd02
    172.31.3.110 k8s-etcd03.example.local k8s-etcd03
    172.31.3.111 k8s-node01.example.local k8s-node01
    172.31.3.112 k8s-node02.example.local k8s-node02
    172.31.3.113 k8s-node03.example.local k8s-node03
    172.31.3.188 kubeapi.raymonds.cc kubeapi
    172.31.3.188 harbor.raymonds.cc harbor
    EOF

    for i in {102..113};do scp /etc/hosts 172.31.3.$i:/etc/ ;done
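Because the heredoc above appends with `>>`, running it a second time duplicates every entry. One way to make the step repeatable is to append an entry only when its name is not already present. The sketch below is an assumption-laden illustration, not the author's method: `add_host_entry` is a hypothetical helper, and the demo writes to a temporary file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP name [alias...]" to a hosts-style file
# unless a non-comment line already lists the first name.
add_host_entry() {
    local file=$1 ip=$2
    shift 2
    # awk exits 0 when the name is already present as a hostname field.
    if awk -v name="$1" '
        $0 !~ /^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file" 2> /dev/null; then
        return 0
    fi
    echo "$ip $*" >> "$file"
}

# Demo against a temporary file instead of /etc/hosts.
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 172.31.3.101 k8s-master01.example.local k8s-master01
add_host_entry "$hosts_file" 172.31.3.101 k8s-master01.example.local k8s-master01   # no-op
add_host_entry "$hosts_file" 172.31.3.188 kubeapi.raymonds.cc kubeapi
cat "$hosts_file"
rm -f "$hosts_file"
```

The demo file ends up with two lines even though three calls were made; pointing the helper at /etc/hosts would give the same behavior on a real node.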

2.7 Disable the firewall

    # CentOS
    systemctl disable --now firewalld

    # CentOS 7
    systemctl disable --now NetworkManager

    # Ubuntu
    systemctl disable --now ufw

2.8 Disable SELinux

    # CentOS
    setenforce 0
    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

    # Ubuntu
    # SELinux is not installed on Ubuntu, so nothing needs to be configured.

2.9 Disable swap

    sed -ri 's/.*swap.*/#&/' /etc/fstab
    swapoff -a

    # On Ubuntu 20.04, run the following commands
    sed -ri 's/.*swap.*/#&/' /etc/fstab
    SD_NAME=`lsblk|awk -F"[ └─]" '/SWAP/{printf $3}'`
    systemctl mask dev-${SD_NAME}.swap
    swapoff -a
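The `sed` expression above prefixes every line containing "swap" with `#`, so a second run adds another `#` to lines that are already commented. A slightly stricter variant, sketched below under the assumption of GNU sed, only touches uncommented lines whose fields include `swap`. The function name `disable_swap_entries` is hypothetical, and the demo works on a temporary file rather than /etc/fstab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are left untouched, so reruns are harmless.
disable_swap_entries() {
    sed -ri '/^[[:space:]]*#/!s/.*[[:space:]]swap[[:space:]].*/#&/' "$1"
}

# Demo against a temporary copy instead of /etc/fstab.
fstab=$(mktemp)
printf '%s\n' \
    'UUID=1111 / xfs defaults 0 0' \
    '/dev/mapper/rl-swap none swap defaults 0 0' > "$fstab"
disable_swap_entries "$fstab"
disable_swap_entries "$fstab"   # running twice does not add a second '#'
cat "$fstab"
rm -f "$fstab"
```

After the demo, only the swap line carries a single leading `#`; `swapoff -a` is still needed to turn swap off for the running system.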

2.10 时间同步

ha01和ha02上安装chrony-server:

    [root@k8s-ha01 ~]# cat install_chrony_server.sh
    #!/bin/bash
    #
    #**********************************************************************************************
    #Author:        Raymond
    #Date:          2021-11-22
    #FileName:      install_chrony_server.sh
    #Description:   install_chrony_server for CentOS 7/8 & Ubuntu 18.04/20.04 & Rocky 8
    #Copyright (C): 2021 All rights reserved
    #*********************************************************************************************
    COLOR="echo -e \\033[01;31m"
    END='\033[0m'

    #Detect the distribution name from /etc/os-release
    os(){
        OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`
    }

    install_chrony(){
        ${COLOR}"Installing the chrony package..."${END}
        if [ ${OS_ID} == "CentOS" -o ${OS_ID} == "Rocky" ] &> /dev/null;then
            yum -y install chrony &> /dev/null
            sed -i -e '/^pool.*/d' -e '/^server.*/d' -e '/^# Please consider .*/a\server ntp.aliyun.com iburst\nserver time1.cloud.tencent.com iburst\nserver ntp.tuna.tsinghua.edu.cn iburst' -e 's@^#allow.*@allow 0.0.0.0/0@' -e 's@^#local.*@local stratum 10@' /etc/chrony.conf
            systemctl enable --now chronyd &> /dev/null
            systemctl is-active chronyd &> /dev/null ||  { ${COLOR}"chrony failed to start, exiting!"${END} ; exit; }
            ${COLOR}"chrony installation complete"${END}
        else
            apt -y install chrony &> /dev/null
            sed -i -e '/^pool.*/d' -e '/^# See http:.*/a\server ntp.aliyun.com iburst\nserver time1.cloud.tencent.com iburst\nserver ntp.tuna.tsinghua.edu.cn iburst' /etc/chrony/chrony.conf
            echo "allow 0.0.0.0/0" >> /etc/chrony/chrony.conf
            echo "local stratum 10" >> /etc/chrony/chrony.conf
            systemctl enable --now chronyd &> /dev/null
            #apt starts chrony at install time, so restart it to pick up the edited configuration
            systemctl restart chronyd
            systemctl is-active chronyd &> /dev/null ||  { ${COLOR}"chrony failed to start, exiting!"${END} ; exit; }
            ${COLOR}"chrony installation complete"${END}
        fi
    }

    main(){
        os
        install_chrony
    }

    main

    [root@k8s-ha01 ~]# bash install_chrony_server.sh
    chrony installation complete

    [root@k8s-ha02 ~]# bash install_chrony_server.sh
    chrony installation complete

    [root@k8s-ha01 ~]# chronyc sources -nv
    210 Number of sources = 3
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* 203.107.6.88                  2   6    17    39  -1507us[-8009us] +/-   37ms
    ^- 139.199.215.251               2   6    17    39    +10ms[  +10ms] +/-   48ms
    ^? 101.6.6.172                   0   7     0     -     +0ns[   +0ns] +/-    0ns

    [root@k8s-ha02 ~]# chronyc sources -nv
    210 Number of sources = 3
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* 203.107.6.88                  2   6    17    40    +90us[-1017ms] +/-   32ms
    ^+ 139.199.215.251               2   6    33    37    +13ms[  +13ms] +/-   25ms
    ^? 101.6.6.172                   0   7     0     -     +0ns[   +0ns] +/-    0ns
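In the chronyc sources output, the row that begins with ^* is the source chronyd is currently synchronised to. As a minimal sketch, that check can be scripted instead of read by eye; has_selected_source is a hypothetical helper name introduced here, and it only inspects text passed on standard input.

```shell
#!/bin/bash
# Sketch: succeed only if `chronyc sources` output (read from stdin)
# contains a currently selected source, marked "^*" at the start of a row.
# has_selected_source is a hypothetical helper, not part of chrony.
has_selected_source() {
    grep -q '^\^\*'
}

# Example: only query chronyc when it is installed on this host
if command -v chronyc > /dev/null 2>&1; then
    if chronyc sources -n 2> /dev/null | has_selected_source; then
        echo "a time source is selected"
    else
        echo "no time source selected yet"
    fi
fi
```

A host that has just started chronyd may need a short while before any row shows ^*, so a failed check right after installation is not necessarily an error.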

Install chrony-client on the master, node, etcd, and harbor hosts:

    [root@k8s-master01 ~]# cat install_chrony_client.sh
    #!/bin/bash
    #
    #**********************************************************************************************
    #Author:        Raymond
    #Date:          2021-11-22
    #FileName:      install_chrony_client.sh
    #Description:   install_chrony_client for CentOS 7/8 & Ubuntu 18.04/20.04 & Rocky 8
    #Copyright (C): 2021 All rights reserved
    #*********************************************************************************************
    COLOR="echo -e \\033[01;31m"
    END='\033[0m'
    #The two chrony servers (ha01 and ha02)
    SERVER1=172.31.3.104
    SERVER2=172.31.3.105

    #Detect the distribution name from /etc/os-release
    os(){
        OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`
    }

    install_chrony(){
        ${COLOR}"Installing the chrony package..."${END}
        if [ ${OS_ID} == "CentOS" -o ${OS_ID} == "Rocky" ] &> /dev/null;then
            yum -y install chrony &> /dev/null
            sed -i -e '/^pool.*/d' -e '/^server.*/d' -e '/^# Please consider .*/a\server '${SERVER1}' iburst\nserver '${SERVER2}' iburst' /etc/chrony.conf
            systemctl enable --now chronyd &> /dev/null
            systemctl is-active chronyd &> /dev/null ||  { ${COLOR}"chrony failed to start, exiting!"${END} ; exit; }
            ${COLOR}"chrony installation complete"${END}
        else
            apt -y install chrony &> /dev/null
            sed -i -e '/^pool.*/d' -e '/^# See http:.*/a\server '${SERVER1}' iburst\nserver '${SERVER2}' iburst' /etc/chrony/chrony.conf
            systemctl enable --now chronyd &> /dev/null
            systemctl is-active chronyd &> /dev/null ||  { ${COLOR}"chrony failed to start, exiting!"${END} ; exit; }
            systemctl restart chronyd
            ${COLOR}"chrony installation complete"${END}
        fi
    }

    main(){
        os
        install_chrony
    }

    main

    [root@k8s-master01 ~]# for i in k8s-master02 k8s-master03 k8s-harbor01 k8s-harbor02 k8s-etcd01 k8s-etcd02 k8s-etcd03 k8s-node01 k8s-node02 k8s-node03;do scp -o StrictHostKeyChecking=no install_chrony_client.sh $i:/root/ ; done

    [root@k8s-master01 ~]# bash install_chrony_client.sh
    [root@k8s-master02 ~]# bash install_chrony_client.sh
    [root@k8s-master03 ~]# bash install_chrony_client.sh
    [root@k8s-harbor01:~]# bash install_chrony_client.sh
    [root@k8s-harbor02:~]# bash install_chrony_client.sh
    [root@k8s-etcd01:~]# bash install_chrony_client.sh
    [root@k8s-etcd02:~]# bash install_chrony_client.sh
    [root@k8s-etcd03:~]# bash install_chrony_client.sh
    [root@k8s-node01:~]# bash install_chrony_client.sh
    [root@k8s-node02:~]# bash install_chrony_client.sh
    [root@k8s-node03:~]# bash install_chrony_client.sh

    [root@k8s-master01 ~]# chronyc sources -nv
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* k8s-ha01.example.local        3   6    37    51    +13us[-1091us] +/-   38ms
    ^+ k8s-ha02.example.local        3   6    37    51    -14us[-1117us] +/-   36ms
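Both scripts branch on OS_ID, which the os() function extracts from the NAME= line of /etc/os-release with a sed expression. The sketch below wraps that same expression in a function that accepts a file path, so its behaviour can be tried against sample files; detect_os_id and its optional argument are assumptions made for illustration.

```shell
#!/bin/bash
# Sketch: print the first alphabetic word of the NAME= value in an
# os-release style file, using the same sed expression as the scripts above.
# detect_os_id is a hypothetical wrapper introduced for illustration.
detect_os_id() {
    # $1: path to the file; defaults to /etc/os-release
    sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' "${1:-/etc/os-release}"
}

# Example: show what the install scripts would see on this host
if [ -r /etc/os-release ]; then
    echo "detected OS_ID: $(detect_os_id)"
fi
```

The expression only matches a double-quoted NAME value, so a file that writes NAME without quotes yields an empty result and the scripts would fall through to the apt branch.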

2.11 Set the time zone

Set the time zone on all nodes as follows:

    ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    echo 'Asia/Shanghai' >/etc/timezone

    #On Ubuntu, also set the following
    cat >> /etc/default/locale <<-EOF
    LC_TIME=en_DK.UTF-8
    EOF
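Because the time zone is set by pointing /etc/localtime at a file under the zoneinfo directory, the active zone can be read back from the symlink target. The following is a small sketch of that check; zone_of_link is a hypothetical helper, and it assumes the link target contains a /zoneinfo/ path component.

```shell
#!/bin/bash
# Sketch: print the zone name that a localtime-style symlink points at.
# zone_of_link is a hypothetical helper introduced for illustration.
zone_of_link() {
    # $1: path to the symlink; defaults to /etc/localtime
    readlink "${1:-/etc/localtime}" | sed 's@.*/zoneinfo/@@'
}

# Example: report the zone only when /etc/localtime is a symlink
if [ -L /etc/localtime ]; then
    echo "current zone: $(zone_of_link)"
fi
```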

2.12 Tune resource limits

Configure limits on all nodes:

    ulimit -SHn 65535

    cat >>/etc/security/limits.conf <<EOF
    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 65535
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited
    EOF
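The limits are appended to /etc/security/limits.conf, so running the step more than once leaves several entries for the same item. A quick way to see which entry comes last for a given domain, type, and item is sketched below; limit_value is a hypothetical helper that only parses the file and makes no claim about how the system applies it.

```shell
#!/bin/bash
# Sketch: print the value of the last entry matching a domain/type/item
# triple in a limits.conf style file. Comment lines never match because
# their first field starts with "#".
# limit_value is a hypothetical helper introduced for illustration.
limit_value() {
    # $1: file, $2: domain (e.g. "*"), $3: type (soft|hard), $4: item (e.g. nofile)
    awk -v d="$2" -v t="$3" -v i="$4" \
        '$1 == d && $2 == t && $3 == i { v = $4 } END { if (v != "") print v }' "$1"
}

# Example: compare the configured soft nofile entry with the current shell limit
if [ -r /etc/security/limits.conf ]; then
    echo "configured soft nofile: $(limit_value /etc/security/limits.conf '*' soft nofile)"
fi
echo "current shell nofile: $(ulimit -n)"
```

Values from limits.conf are applied at login, so the current shell limit only reflects them in a new session.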

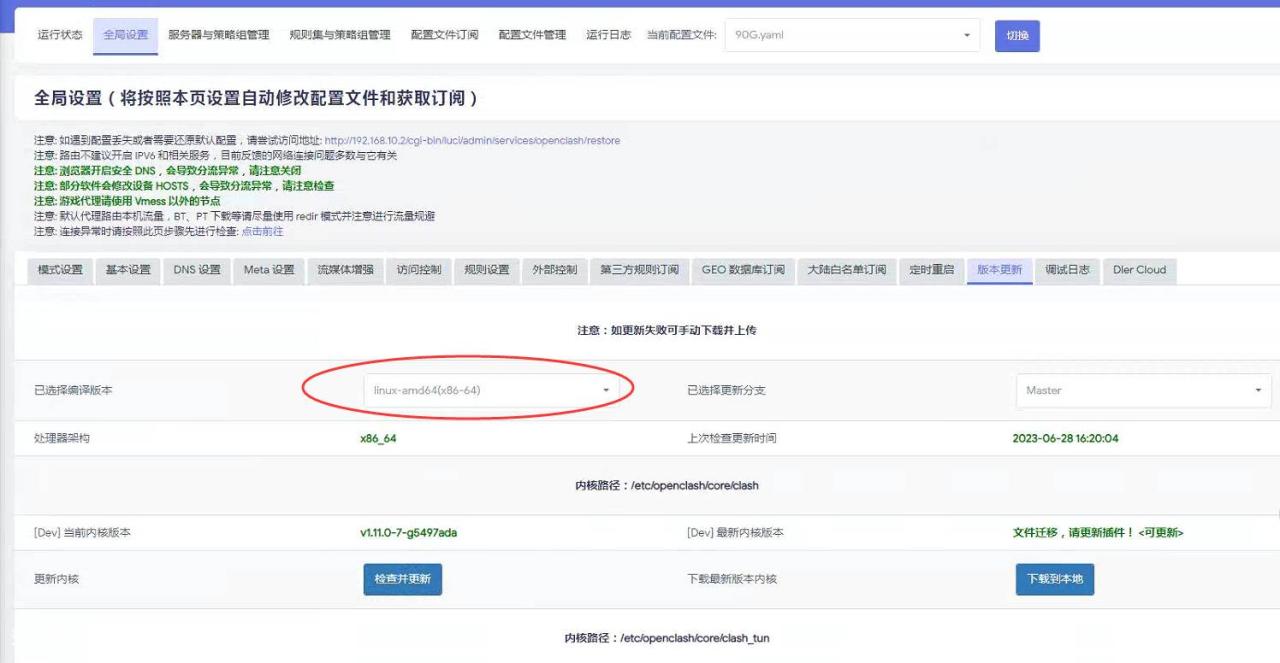

2.13 Kernel upgrade

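CentOS 7 ships with kernel 3.10, while this guide requires kernel 4.18 or later for Kubernetes, so CentOS 7 must have its kernel upgraded before Kubernetes is installed. Upgrade other systems according to your own needs.

This guide upgrades CentOS 7 to kernel 4.19.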
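Whether a host already meets the 4.18 requirement can be checked before any packages are downloaded. The sketch below compares version strings with sort -V; version_at_least is a hypothetical helper, and it assumes a sort implementation that supports the -V option (GNU coreutils does).

```shell
#!/bin/bash
# Sketch: succeed if version $2 is greater than or equal to version $1.
# version_at_least is a hypothetical helper introduced for illustration.
version_at_least() {
    # sort -V orders version strings; if the required minimum sorts first
    # (or both are equal), the candidate version is new enough.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Example: test the running kernel against the 4.18 requirement
if version_at_least 4.18 "$(uname -r)"; then
    echo "kernel $(uname -r) meets the 4.18 requirement"
else
    echo "kernel $(uname -r) is older than 4.18; upgrade it first"
fi
```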

Download the kernel packages on master01:

    [root@k8s-master01 ~]# wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm
    [root@k8s-master01 ~]# wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

Copy the packages from master01 to the other nodes:

    [root@k8s-master01 ~]# for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02 k8s-node03;do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/ ; done

Install the kernel on all nodes:

    cd /root && yum localinstall -y kernel-ml*

Change the kernel boot order on the master and node hosts:

    grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg
    grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

Check that the default kernel is 4.19:

    grubby --default-kernel

    [root@k8s-master01 ~]# grubby --default-kernel
    /boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

Reboot all nodes, then check that the running kernel is 4.19:

    reboot
    uname -a

    [root@k8s-master01 ~]# uname -a
    Linux k8s-master01 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

2.14 Install the ipvs tools and tune the kernel

Install ipvsadm on the master and node hosts:

    #CentOS
    yum -y install ipvsadm ipset sysstat conntrack libseccomp

    #Ubuntu
    apt -y install ipvsadm ipset sysstat conntrack libseccomp-dev

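Configure the ipvs modules on the master and node hosts. From kernel 4.19 onward, nf_conntrack_ipv4 has been replaced by nf_conntrack; older kernels that still provide nf_conntrack_ipv4 use that module instead: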

    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack #on older kernels that still provide nf_conntrack_ipv4, change this to nf_conntrack_ipv4

    cat >> /etc/modules-load.d/ipvs.conf <<EOF
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    #on older kernels that still provide nf_conntrack_ipv4, change the next line to nf_conntrack_ipv4
    nf_conntrack
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip
    EOF

Then run systemctl restart systemd-modules-load.service. The note about nf_conntrack_ipv4 sits on its own line in the file because modules-load.d only treats a line as a comment when it starts with # or ;, so a comment placed after a module name would be read as part of the name.
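Rather than deciding between nf_conntrack and nf_conntrack_ipv4 from the kernel version number, a script can ask whether the legacy module is actually available on the host. This is a minimal sketch under that assumption; pick_conntrack_module and the MODINFO override variable are hypothetical names introduced here so the probe command can be swapped out when trying the function.

```shell
#!/bin/bash
# Sketch: choose the conntrack module name by probing for the legacy module.
# pick_conntrack_module and MODINFO are hypothetical names for illustration;
# by default the probe is the standard modinfo command.
pick_conntrack_module() {
    if "${MODINFO:-modinfo}" nf_conntrack_ipv4 > /dev/null 2>&1; then
        # The legacy module is still shipped for this kernel
        echo nf_conntrack_ipv4
    else
        # The legacy module is absent (or modinfo is unavailable)
        echo nf_conntrack
    fi
}

# Example: print the module name that suits this host
echo "conntrack module to load: $(pick_conntrack_module)"
```

The printed name can then be used in both the modprobe command and the modules-load.d file.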

Enable the kernel parameters that a k8s cluster requires. Configure them on the master and node hosts:

    cat > /etc/sysctl.d/k8s.conf <<EOF
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    fs.may_detach_mounts = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720

    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl =15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.ipv4.ip_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF

    sysctl --system
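When a sysctl file is assembled by copy and paste, the same key can end up defined more than once, and it is then easy to edit one copy and miss the other. The sketch below lists such keys; duplicate_sysctl_keys is a hypothetical helper that only reads the file it is given.

```shell
#!/bin/bash
# Sketch: print every key that is defined more than once in a
# sysctl.d style file, one key per line.
# duplicate_sysctl_keys is a hypothetical helper introduced for illustration.
duplicate_sysctl_keys() {
    # $1: path to the file
    awk -F= '
        /^[ \t]*[#;]/ { next }      # skip comment lines
        /^[ \t]*$/    { next }      # skip blank lines
        {
            key = $1
            gsub(/[ \t]/, "", key)  # treat "a.b = 1" and "a.b=1" alike
            if (seen[key]++ == 1) print key
        }
    ' "$1"
}

# Example: scan the file written in this section, if it exists
if [ -r /etc/sysctl.d/k8s.conf ]; then
    duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
fi
```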

Common Kubernetes kernel tuning parameters explained:

    net.ipv4.ip_forward = 1
    #0 disables IP forwarding; 1 enables it.

    net.bridge.bridge-nf-call-iptables = 1
    #Packets forwarded by a layer-2 bridge are also filtered by the iptables FORWARD rules, so L3 iptables rules can end up filtering L2 frames.

    net.bridge.bridge-nf-call-ip6tables = 1
    #Whether IPv6 packets are filtered in the ip6tables chains.

    fs.may_detach_mounts = 1
    #Needs to be 1 when containers run on the system.

    vm.overcommit_memory=1
    #0: the kernel checks whether enough memory is available for the requesting process; if so, the allocation is allowed, otherwise it fails and an error is returned to the process.
    #1: the kernel allows every allocation, regardless of the current memory state.
    #2: the kernel does not overcommit; it refuses allocations beyond swap plus a configurable share of physical memory (vm.overcommit_ratio).

    vm.panic_on_oom=0
    #OOM is short for out of memory: memory is exhausted and cannot be allocated. This parameter decides how the kernel reacts.
    #0: when memory runs out, start the OOM killer.
    #1: when memory runs out, the kernel may panic or may start the OOM killer.
    #2: when memory runs out, force a kernel panic.

    fs.inotify.max_user_watches=89100
    #Number of watches a single user can hold at the same time (a watch usually targets a directory, so this bounds how many directories one user can monitor at once).

    fs.file-max=52706963
    #Maximum number of open files across all processes.

    fs.nr_open=52706963
    #Maximum number of files a single process can allocate.

    net.netfilter.nf_conntrack_max=2310720
    #Size of the connection tracking table. It is recommended to derive it from memory: CONNTRACK_MAX = RAMSIZE (in bytes) / 16384 / (x / 32), where x is the pointer width in bits, and to keep nf_conntrack_max = 4 * nf_conntrack_buckets. Default 262144.

    net.ipv4.tcp_keepalive_time = 600
    #Idle time before keepalive probing starts, i.e. the normal heartbeat period. Default 7200s (2 hours).

    net.ipv4.tcp_keepalive_probes = 3
    #Number of further keepalive probes sent when no acknowledgement arrives after tcp_keepalive_time. Default 9.

    net.ipv4.tcp_keepalive_intvl =15
    #Interval between keepalive probes. Default 75s.

    net.ipv4.tcp_max_tw_buckets = 36000
    #Intermediate proxies such as Nginx should watch this value, because it protects the system: once all ports are in use, the service fails. tcp_max_tw_buckets lowers the chance of that happening and buys time to recover.

    net.ipv4.tcp_tw_reuse = 1
    #Only affects the client side; when enabled, the client reclaims TIME_WAIT sockets within 1s. Reuse relies on TCP timestamps, so it has no effect while net.ipv4.tcp_timestamps is 0.

    net.ipv4.tcp_max_orphans = 327680
    #Number of sockets not attached to any process that the system can handle. Watch this value when a large number of connections must be set up quickly.

    net.ipv4.tcp_orphan_retries = 3
    #Relevant when many connections sit in FIN-WAIT-1.
    #A FIN may be lost after it is sent, so how many times is it retransmitted? FIN retransmission also backs off; the 2.6.358 kernel uses exponential backoff (2s, 4s, ...), and the final number of retries is limited by tcp_orphan_retries.

    net.ipv4.tcp_syncookies = 1
    #Switch for the SYN cookie feature, which protects against some SYN attacks. tcp_synack_retries and tcp_syn_retries define the SYN retry counts.

    net.ipv4.tcp_max_syn_backlog = 16384
    #Maximum length of the queue of incoming SYN requests, i.e. the upper limit on half-open connections. Default 1024. Raising it helps heavily loaded servers.

    net.ipv4.ip_conntrack_max = 65536
    #Limit on the number of TCP connections the system tracks; default 65536. This is a legacy key name, and kernels that expose net.netfilter.nf_conntrack_max may not provide it.

    net.ipv4.tcp_timestamps = 0
    #When iptables is used for NAT, hosts on the internal network could ping a domain but could not reach it with curl; the cause was net.ipv4.tcp_timestamps set to 1, i.e. timestamps enabled.

    net.core.somaxconn = 16384
    #Kernel parameter that caps the backlog of a listening socket. The backlog is the socket's listen queue: a request that has not yet been handled or established waits there. The socket server can process all requests in the backlog at once, and processed requests leave the queue. When the server is slow enough that the queue fills up, new requests are rejected.
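The sizing rule quoted for nf_conntrack_max, CONNTRACK_MAX = RAMSIZE (in bytes) / 16384 / (x / 32), is plain integer arithmetic, so it can be evaluated for any node. A minimal sketch follows; conntrack_max_for is a hypothetical helper, and x is taken to be the pointer width in bits (32 or 64).

```shell
#!/bin/bash
# Sketch: evaluate CONNTRACK_MAX = RAMSIZE (in bytes) / 16384 / (x / 32).
# conntrack_max_for is a hypothetical helper introduced for illustration.
conntrack_max_for() {
    # $1: RAM size in bytes, $2: pointer width in bits (32 or 64)
    echo $(( $1 / 16384 / ($2 / 32) ))
}

# Example: apply the formula to this host, reading MemTotal (in kB) from /proc/meminfo
if [ -r /proc/meminfo ]; then
    mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
    echo "suggested nf_conntrack_max: $(conntrack_max_for $(( mem_kb * 1024 )) "$(getconf LONG_BIT)")"
fi
```

For a 64-bit node with exactly 4 GiB of memory the formula gives 4294967296 / 16384 / 2 = 131072, which is well below the 2310720 written to k8s.conf in this guide.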

After configuring the kernel on all nodes, reboot the servers and confirm that the modules are still loaded after the reboot:

    reboot
    lsmod | grep --color=auto -e ip_vs -e nf_conntrack

    [root@k8s-master01 ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack
    ip_vs_ftp              16384  0
    nf_nat                 32768  1 ip_vs_ftp
    ip_vs_sed              16384  0
    ip_vs_nq               16384  0
    ip_vs_fo               16384  0
    ip_vs_sh               16384  0
    ip_vs_dh               16384  0
    ip_vs_lblcr            16384  0
    ip_vs_lblc             16384  0
    ip_vs_wrr              16384  0
    ip_vs_rr               16384  0
    ip_vs_wlc              16384  0
    ip_vs_lc               16384  0
    ip_vs                 151552  24 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp
    nf_conntrack          143360  2 nf_nat,ip_vs
    nf_defrag_ipv6         20480  1 nf_conntrack
    nf_defrag_ipv4         16384  1 nf_conntrack
    libcrc32c              16384  4 nf_conntrack,nf_nat,xfs,ip_vs
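Comparing the lsmod output with the module list by eye is error-prone when the list is long. The sketch below prints every module named in a modules-load.d style file that does not appear in a saved lsmod listing; missing_modules is a hypothetical helper, and a module compiled into the kernel would also be reported because lsmod does not list built-in code.

```shell
#!/bin/bash
# Sketch: print modules listed in a modules-load.d style file that are
# absent from a saved `lsmod` listing.
# missing_modules is a hypothetical helper introduced for illustration.
missing_modules() {
    # $1: modules-load.d style file, $2: file holding `lsmod` output
    while read -r mod rest; do
        # Skip blank lines and lines that start with # or ;
        case "$mod" in
            ''|'#'*|';'*) continue ;;
        esac
        # A module counts as loaded when it appears in the first lsmod column
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$2" || echo "$mod"
    done < "$1"
}

# Example: check the file written earlier against the live module list
if [ -r /etc/modules-load.d/ipvs.conf ] && command -v lsmod > /dev/null 2>&1; then
    snapshot=$(mktemp)
    lsmod > "$snapshot"
    missing_modules /etc/modules-load.d/ipvs.conf "$snapshot"
    rm -f "$snapshot"
fi
```

An empty result means every listed module is present in the listing.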

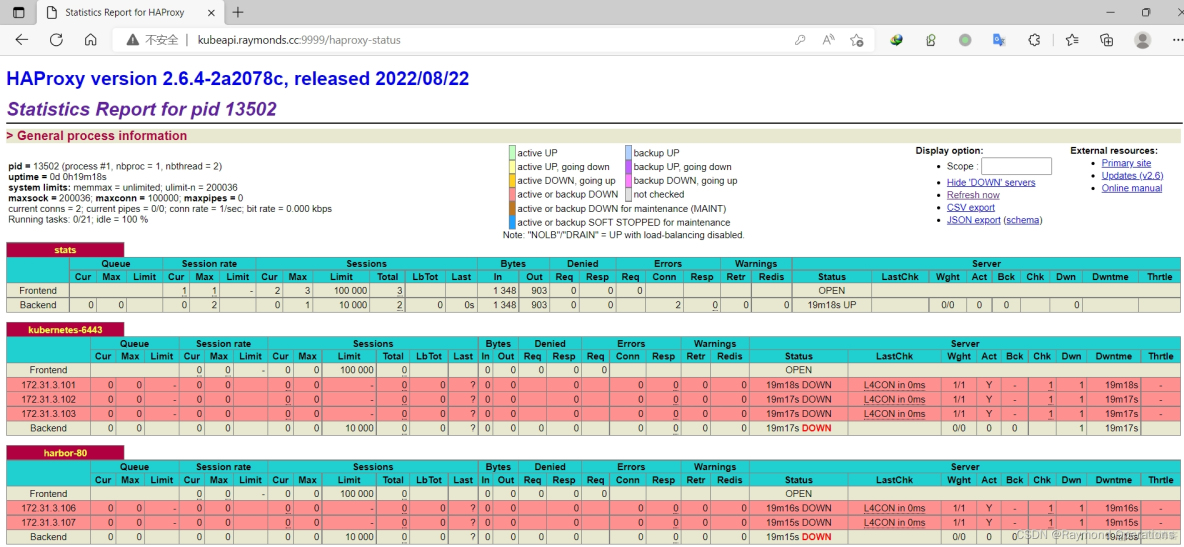

3. Install the high-availability components

(Note: if you are not building a high-availability cluster, haproxy and keepalived do not need to be installed.)

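On a public cloud, use the provider's own load balancer, such as Alibaba Cloud SLB or Tencent Cloud CLB, in place of haproxy and keepalived, because most public clouds do not support keepalived. On Alibaba Cloud, the kubectl client also cannot be placed on a master node. Tencent Cloud is recommended, because Alibaba Cloud's SLB has a loopback problem: a server behind the SLB cannot connect back to the SLB, whereas Tencent Cloud has fixed this.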

On Alibaba Cloud, the chain needs to be: slb -> haproxy -> apiserver

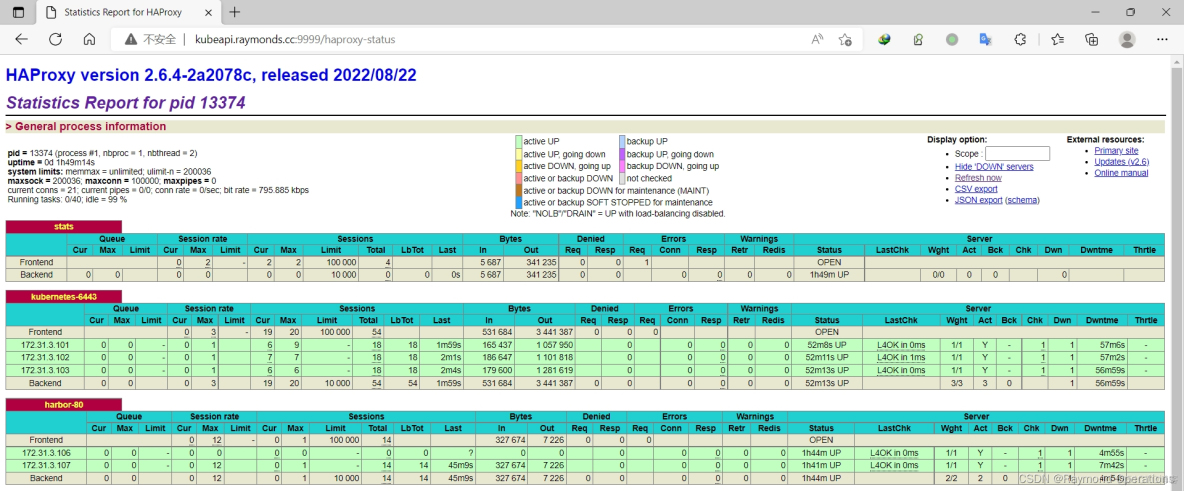

3.1 Install haproxy

Install HAProxy on ha01 and ha02:

    [root@k8s-ha01 ~]# cat install_haproxy.sh
    #!/bin/bash
    #
    #**********************************************************************************************
    #Author:        Raymond
    #Date:          2022-04-14
    #FileName:      install_haproxy.sh
    #Description:   The test script
    #Copyright (C): 2021 All rights reserved
    #*********************************************************************************************
    SRC_DIR=/usr/local/src
    COLOR="echo -e \\033[01;31m"
    END='\033[0m'
    CPUS=`lscpu |awk '/^CPU\(s\)/{print $2}'`

    # Lua download URL: http://www.lua.org/ftp/lua-5.4.4.tar.gz
    LUA_FILE=lua-5.4.4.tar.gz

    # HAProxy download URL: https://www.haproxy.org/download/2.6/src/haproxy-2.6.4.tar.gz
    HAPROXY_FILE=haproxy-2.6.4.tar.gz
    HAPROXY_INSTALL_DIR=/apps/haproxy

    STATS_AUTH_USER=admin
    STATS_AUTH_PASSWORD=123456

    VIP=172.31.3.188
    MASTER01=172.31.3.101
    MASTER02=172.31.3.102
    MASTER03=172.31.3.103
    HARBOR01=172.31.3.106
    HARBOR02=172.31.3.107

    # Detect the distribution name (CentOS, Rocky, Ubuntu, ...).
    os(){
        OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`
    }

    # Make sure both source tarballs are present in ${SRC_DIR}.
    check_file (){
        cd ${SRC_DIR}
        ${COLOR}'Checking the HAProxy source packages'${END}
        if [ ! -e ${LUA_FILE} ];then
            ${COLOR}"${LUA_FILE} is missing, place it in ${SRC_DIR}"${END}
            exit 1
        elif [ ! -e ${HAPROXY_FILE} ];then
            ${COLOR}"${HAPROXY_FILE} is missing, place it in ${SRC_DIR}"${END}
            exit 1
        else
            ${COLOR}"All required files are present"${END}
        fi
    }

    install_haproxy(){
        [ -d ${HAPROXY_INSTALL_DIR} ] && { ${COLOR}"HAProxy is already installed, aborting"${END};exit 1; }
        ${COLOR}"Installing HAProxy"${END}
        ${COLOR}"Installing HAProxy build dependencies"${END}
        if [ ${OS_ID} == "CentOS" -o ${OS_ID} == "Rocky" ] &> /dev/null;then
            yum -y install gcc make gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel \
                systemd-devel libtermcap-devel ncurses-devel libevent-devel readline-devel &> /dev/null
        else
            apt update &> /dev/null;apt -y install gcc make openssl libssl-dev libpcre3 libpcre3-dev \
                zlib1g-dev libreadline-dev libsystemd-dev &> /dev/null
        fi

        # Build Lua first; HAProxy is linked against it (USE_LUA=1).
        tar xf ${LUA_FILE}
        LUA_DIR=`echo ${LUA_FILE} | sed -nr 's/^(.*[0-9]).([[:lower:]]).*/\1/p'`
        cd ${LUA_DIR}
        make all test

        # Build and install HAProxy.
        cd ${SRC_DIR}
        tar xf ${HAPROXY_FILE}
        HAPROXY_DIR=`echo ${HAPROXY_FILE} | sed -nr 's/^(.*[0-9]).([[:lower:]]).*/\1/p'`
        cd ${HAPROXY_DIR}
        make -j ${CPUS} ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 \
            USE_SYSTEMD=1 USE_CPU_AFFINITY=1 USE_LUA=1 \
            LUA_INC=${SRC_DIR}/${LUA_DIR}/src/ LUA_LIB=${SRC_DIR}/${LUA_DIR}/src/ \
            PREFIX=${HAPROXY_INSTALL_DIR}
        make install PREFIX=${HAPROXY_INSTALL_DIR}
        [ $? -eq 0 ] && $COLOR"HAProxy compiled and installed successfully"$END || { $COLOR"HAProxy build failed, exiting!"$END;exit 1; }

        # \$MAINPID is escaped so that the shell writes it literally into the
        # unit file; unescaped, it would expand to an empty string here.
        cat > /lib/systemd/system/haproxy.service <<-EOF
    [Unit]
    Description=HAProxy Load Balancer
    After=syslog.target network.target

    [Service]
    ExecStartPre=/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q
    ExecStart=/usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/lib/haproxy/haproxy.pid
    ExecReload=/bin/kill -USR2 \$MAINPID

    [Install]
    WantedBy=multi-user.target
    EOF

        [ -L /usr/sbin/haproxy ] || ln -s ../..${HAPROXY_INSTALL_DIR}/sbin/haproxy /usr/sbin/ &> /dev/null
        [ -d /etc/haproxy ] || mkdir /etc/haproxy &> /dev/null
        [ -d /var/lib/haproxy/ ] || mkdir -p /var/lib/haproxy/ &> /dev/null

        cat > /etc/haproxy/haproxy.cfg <<-EOF
    global
        maxconn 100000
        chroot ${HAPROXY_INSTALL_DIR}
        stats socket /var/lib/haproxy/haproxy.sock mode 600 level admin
        uid 99
        gid 99
        daemon
        pidfile /var/lib/haproxy/haproxy.pid
        log 127.0.0.1 local3 info

    defaults
        option http-keep-alive
        option forwardfor
        maxconn 100000
        mode http
        timeout connect 300000ms
        timeout client 300000ms
        timeout server 300000ms

    listen stats
        mode http
        bind 0.0.0.0:9999
        stats enable
        log global
        stats uri /haproxy-status
        stats auth ${STATS_AUTH_USER}:${STATS_AUTH_PASSWORD}

    listen kubernetes-6443
        bind ${VIP}:6443
        mode tcp
        log global
        server ${MASTER01} ${MASTER01}:6443 check inter 3s fall 2 rise 5
        server ${MASTER02} ${MASTER02}:6443 check inter 3s fall 2 rise 5
        server ${MASTER03} ${MASTER03}:6443 check inter 3s fall 2 rise 5

    listen harbor-80
        bind ${VIP}:80
        mode http
        log global
        balance source
        server ${HARBOR01} ${HARBOR01}:80 check inter 3s fall 2 rise 5
        server ${HARBOR02} ${HARBOR02}:80 check inter 3s fall 2 rise 5
    EOF

        # Allow HAProxy to bind to the VIP even on the node that does not hold it.
        cat >> /etc/sysctl.conf <<-EOF
    net.ipv4.ip_nonlocal_bind = 1
    EOF
        sysctl -p &> /dev/null

        echo "PATH=${HAPROXY_INSTALL_DIR}/sbin:${PATH}" > /etc/profile.d/haproxy.sh
        systemctl daemon-reload
        systemctl enable --now haproxy &> /dev/null
        systemctl is-active haproxy &> /dev/null && ${COLOR}"HAProxy service started successfully!"${END} || { ${COLOR}"HAProxy failed to start, exiting!"${END} ; exit 1; }
        ${COLOR}"HAProxy installation complete"${END}
    }

    main(){
        os
        check_file
        install_haproxy
    }

    main

    [root@k8s-ha01 ~]# bash install_haproxy.sh
    [root@k8s-ha02 ~]# bash install_haproxy.sh
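Once the script has run on both nodes, it is worth confirming that HAProxy is actually listening on the addresses in its configuration. Because the script sets net.ipv4.ip_nonlocal_bind = 1, the VIP listeners should appear on both ha01 and ha02, including the node that does not currently hold the VIP. The sketch below parses `ss -ntl` style output. The helper name `listening_on` is an assumption for illustration, and the check only inspects the local-address column.

```shell
#!/bin/bash
# listening_on ADDR:PORT
# Reads `ss -ntl` style output on stdin and succeeds (exit 0) when a LISTEN
# socket with exactly that local address and port is present.
listening_on() {
    awk -v want="$1" '
        # Column 1 is the socket state, column 4 is "Local Address:Port".
        $1 == "LISTEN" && $4 == want { found = 1 }
        END { exit !found }
    '
}

# Usage on ha01 or ha02 (addresses taken from the haproxy.cfg in this section):
# ss -ntl | listening_on 172.31.3.188:6443 && echo "apiserver frontend is up"
# ss -ntl | listening_on 0.0.0.0:9999      && echo "stats page is up"
```

If a listener is missing, running `haproxy -c -f /etc/haproxy/haproxy.cfg` is a reasonable first step to rule out a configuration error.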

3.2 Installing keepalived

Create the KeepAlived health-check script on both ha01 and ha02:

    [root@k8s-ha02 ~]# cat check_haproxy.sh
    #!/bin/bash
    #
    #**********************************************************************************************
    #Author:        Raymond
    #Date:          2022-01-09
    #FileName:      check_haproxy.sh
    #Description:   The test script
    #Copyright (C): 2022 All rights reserved
    #*********************************************************************************************
    err=0
    # Look for a running haproxy process up to three times, one second apart.
    for k in $(seq 1 3);do
        check_code=$(pgrep haproxy)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 1
            continue
        else
            err=0
            break
        fi
    done

    # If haproxy was never found, stop keepalived so that the VIP moves to the other node.
    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi
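The health check above boils down to one pattern: try a probe a few times, and report failure only if every attempt fails. As an illustration of that pattern on its own, here is a minimal sketch with the probe passed in as a command. The function name `retry_check` and its argument order are assumptions, not part of the original script.

```shell
#!/bin/bash
# retry_check ATTEMPTS DELAY CMD [ARG...]
# Runs CMD up to ATTEMPTS times, sleeping DELAY seconds between attempts.
# Returns 0 as soon as CMD succeeds once, 1 if every attempt fails.
retry_check() {
    local attempts=$1 delay=$2 i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        # Pause before the next attempt, but not after the last one.
        [ "$i" -lt "$attempts" ] && sleep "$delay"
        i=$((i + 1))
    done
    return 1
}

# Usage equivalent in spirit to check_haproxy.sh (three tries, one second apart):
# retry_check 3 1 pgrep haproxy > /dev/null || /usr/bin/systemctl stop keepalived
```

Retrying before stopping keepalived avoids a needless VIP failover when haproxy is only briefly absent, for example during a restart.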

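Install KeepAlived on ha01 and ha02. The two nodes use different configurations, so take care not to mix them up. The configuration file is /etc/keepalived/keepalived.conf; on each node, make sure the interface parameter matches that node's network interface name.

Install keepalived as the master on ha01: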
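Since the interface name can differ between hosts, it may be easier to look it up from the node's IP address than to assume a fixed name. The sketch below parses `ip -o -4 addr show` style output and prints the interface that carries a given address. The helper name `iface_for_ip` is an assumption for illustration.

```shell
#!/bin/bash
# iface_for_ip IP
# Reads `ip -o -4 addr show` style output on stdin and prints the name of the
# interface that has IP assigned. Prints nothing if no interface matches.
iface_for_ip() {
    awk -v ip="$1" '
        # One-line format: "<index>: <ifname> inet <addr>/<prefix> ..."
        $3 == "inet" {
            split($4, a, "/")
            if (a[1] == ip) { print $2; exit }
        }
    '
}

# Usage on ha01 (address from the cluster plan):
# ip -o -4 addr show | iface_for_ip 172.31.3.104
```

The printed name is the value to use for the interface parameter in keepalived.conf on that node.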